Is Your Business Ready for AI? 7 Signals You Can Start Now

Not every business is ready for AI. Here's how to know if yours is — with a practical readiness assessment based on real deployments.

By Mike Hodgen

Most Businesses Aren't Ready for AI (And That's Fine)

I watched a $5M logistics company spend six months and $80K trying to implement an AI chatbot for customer service. The problem? Their order data was split across three systems that didn't talk to each other, their process documentation existed only in the heads of two veteran ops managers, and nobody had time to train the AI on actual customer scenarios. The chatbot launched, gave terrible answers, and was quietly killed three weeks later.

AI Readiness Spectrum

The AI hype cycle makes every business owner feel like they're falling behind. You're not. Most companies aren't ready for AI, and that's completely fine.

Here's the truth: "Is my business ready for AI?" isn't a yes/no question. It's a spectrum. I've built 15+ AI systems at my DTC fashion brand, and before we started, we had 6 of the 7 readiness signals I'm about to walk through. That wasn't luck. It was foundational work we'd done over years that happened to position us perfectly for AI.

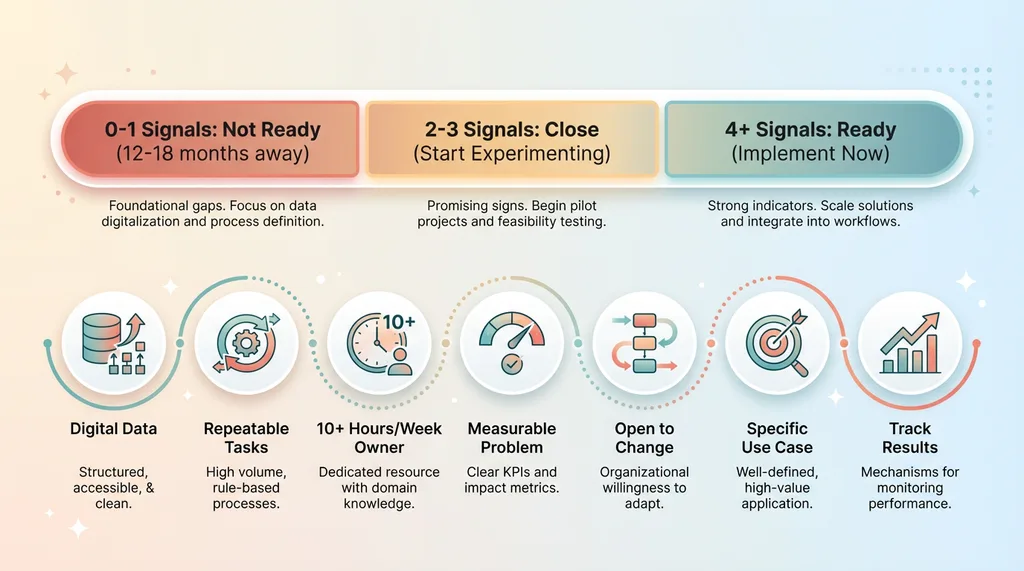

This article gives you 7 concrete signals of AI readiness. If you have 4 or more, you're ready to start. If you have 2-3, you're close but should shore up fundamentals first. If you have 0-1, you'll waste money and time trying to force it.
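As a rough sketch, that scoring rule fits in a few lines of Python. The function name and tier labels are illustrative assumptions, not part of any real tool; only the thresholds (4+, 2-3, 0-1) come from this article.

```python
def readiness_tier(signals_present: int) -> str:
    """Map a count of readiness signals (0-7) to the article's three tiers."""
    if not 0 <= signals_present <= 7:
        raise ValueError("signal count must be between 0 and 7")
    if signals_present >= 4:
        return "ready to start"
    if signals_present >= 2:
        return "close: shore up fundamentals first"
    return "not ready: focus on fundamentals"


# Print the tier for every possible count.
for count in range(8):
    print(count, "->", readiness_tier(count))
```

Count your signals honestly as you read. The tier matters more than the exact number.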

I'm going to be direct about what makes a business ready and what doesn't. Some of you will finish this article knowing you should wait six months. That's a good outcome — it'll save you from becoming another cautionary tale.

Signal 1: You Have Digital Data (Not Just Paper or Tribal Knowledge)

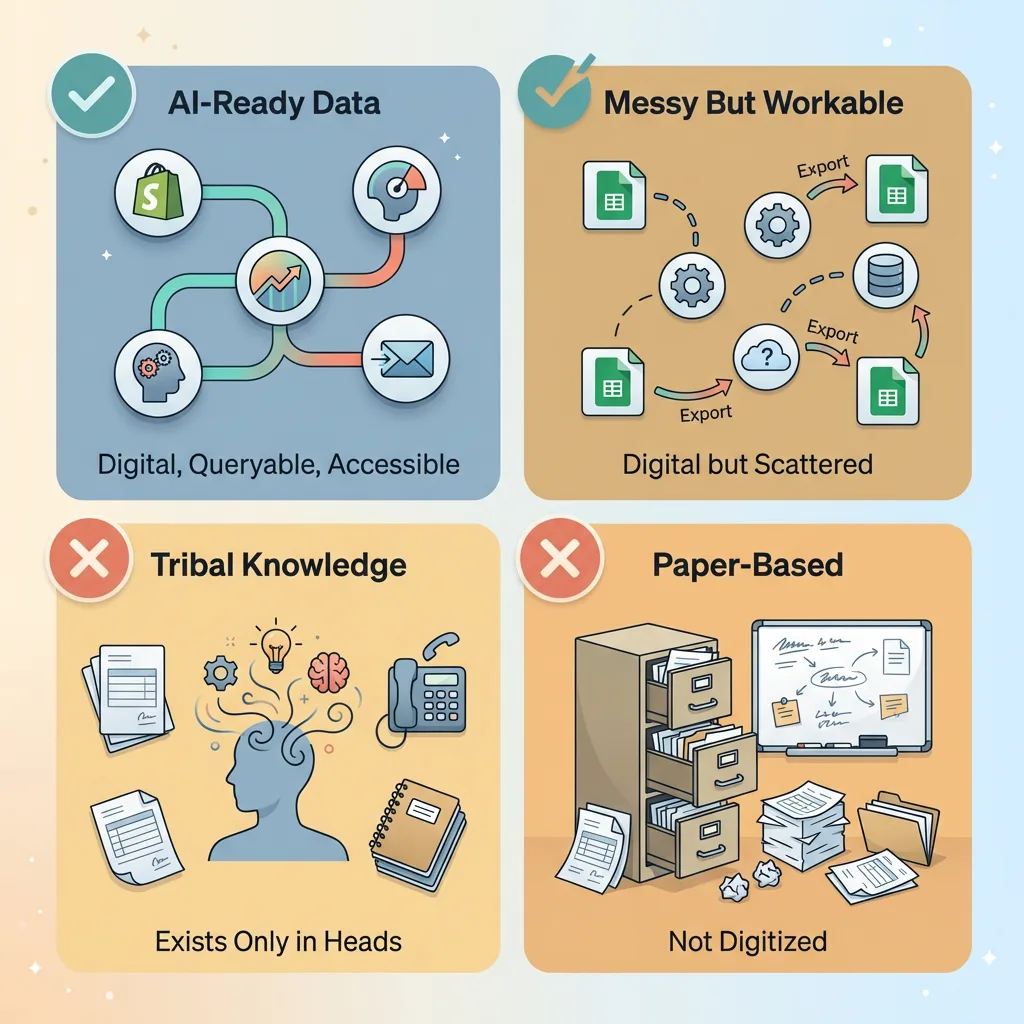

AI needs data to learn from and act on. Not perfect data. Not clean data. Not even well-organized data. But it has to exist in digital form somewhere.

Digital Data Foundation Types

At the brand, we had a messy but functional digital foundation: product data in Shopify, sales history in Google Analytics, customer service conversations in Gorgias, email sequences in Klaviyo, financial data in QuickBooks. None of it was perfectly integrated. Some of it required manual exports. But every critical business function left a digital trail that AI could access and analyze.

That's the minimum bar.

Compare that to a manufacturing company I consulted with last year. Their production schedules lived on whiteboards. Order details came through phone calls and were written on paper forms. Quality control data was stored in filing cabinets. Their best production manager had 20 years of expertise and could tell you the optimal settings for any job — but none of that knowledge existed anywhere except his brain.

We couldn't implement AI there. Not without spending 6-12 months digitizing everything first.

You don't need a sophisticated data warehouse or pristine data architecture. I've worked with plenty of businesses where data is scattered across Google Sheets, multiple Shopify stores, various email platforms, and half-implemented CRMs. Messy is fine. Queryable is essential.

The test: Can you pull up last month's sales by product category? Do you have customer service conversations stored anywhere? Can you export your product catalog? If you're nodding yes to questions like these, you have digital data.

If your answer is "I'd have to ask Janet, she keeps track of that stuff" — you have tribal knowledge, not data. That's a fundamental business problem that needs fixing before AI enters the picture.
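To make "queryable" concrete, here is a minimal sketch of the sales-by-category test run against a CSV export. The column names (`order_date`, `category`, `amount`) and the sample rows are hypothetical; a real export from your store or accounting tool will be laid out differently.

```python
import csv
import io
from collections import defaultdict

# Hypothetical export; real column names depend on your platform.
SAMPLE_EXPORT = """order_date,category,amount
2024-05-03,dresses,120.00
2024-05-11,tops,45.50
2024-05-19,dresses,80.00
2024-06-02,tops,60.00
"""


def sales_by_category(csv_file, month: str) -> dict[str, float]:
    """Sum the amount column per category for rows dated in `month` (YYYY-MM)."""
    totals: defaultdict[str, float] = defaultdict(float)
    for row in csv.DictReader(csv_file):
        if row["order_date"].startswith(month):
            totals[row["category"]] += float(row["amount"])
    return dict(totals)


print(sales_by_category(io.StringIO(SAMPLE_EXPORT), "2024-05"))
```

If you can get your data into a shape where a dozen lines like these answer the question, it is queryable enough to start.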

The good news? Digitizing operations is valuable whether you implement AI or not. Better systems, clearer processes, and actual documentation pay for themselves in reduced errors and easier employee onboarding. An AI readiness assessment starts here because this work creates value immediately.

Signal 2: You're Spending Too Much Time on Repeatable Tasks

AI excels at one thing above all others: doing the same task over and over, faster than humans, with consistent quality.

If your team is copying data between systems, writing similar product descriptions, resizing images for different platforms, generating weekly reports by hand, categorizing transactions, responding to routine customer questions — you're sitting on an AI goldmine.

At the brand, we were spending 45 minutes per product on descriptions and SEO metadata. Not because we were slow, but because thorough product content takes time. Write the description. Write the meta description. Pick keywords. Generate alt text for images. Multiply that by 564 products and you're looking at 420+ hours of work.

Now? Three minutes per product. The AI pipeline generates descriptions, SEO metadata, and alt text based on product attributes and images. I review the output, make tweaks if needed, and publish. We still maintain quality — actually, our SEO performance improved — but we've reclaimed hundreds of hours.
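The arithmetic behind those numbers is easy to check. This sketch uses only the figures quoted above (564 products, 45 minutes before, 3 minutes after); the variable and function names are just for illustration.

```python
PRODUCTS = 564        # catalog size quoted in the article
MINUTES_BEFORE = 45   # manual time per product
MINUTES_AFTER = 3     # time per product with the AI pipeline


def total_hours(items: int, minutes_each: float) -> float:
    """Total hours needed to process `items` at `minutes_each` minutes apiece."""
    return items * minutes_each / 60


hours_before = total_hours(PRODUCTS, MINUTES_BEFORE)
hours_after = total_hours(PRODUCTS, MINUTES_AFTER)
hours_saved = hours_before - hours_after

print(f"before: {hours_before:.1f} h")
print(f"after:  {hours_after:.1f} h")
print(f"saved:  {hours_saved:.1f} h")
```

Swap in your own item count and per-item times to get a first estimate for any repeatable task.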

Other examples from our operations: SEO meta descriptions used to be written manually for each of our 313 blog articles. Now they're generated automatically when articles publish. Image processing for different marketplace requirements (Amazon wants different specs than Etsy) was a manual export-resize-upload loop. Now it's automated.

The pattern? If we did it more than 10 times a month, I looked at whether AI could handle it.

Contrast that with truly one-off work. Negotiating a partnership deal. Handling a complex customer escalation. Designing a brand-new product category from scratch. These aren't AI-ready because they don't follow patterns. They require judgment, relationship skills, and creative problem-solving that doesn't map to "input X, output Y."

This is where the work of a Chief AI Officer becomes critical. Someone needs to walk through your operations and identify which repeatable tasks are worth automating first. The winners combine high volume, clear input/output, and measurable time savings.
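One way to run that walkthrough is to list your tasks and rank them. The sketch below is an assumption about how you might do it, not a description of any production system: it keeps tasks done more than 10 times a month with clear input/output, then sorts by monthly minutes spent. The task list is made up.

```python
from typing import NamedTuple


class Task(NamedTuple):
    name: str
    times_per_month: int
    minutes_each: float
    clear_input_output: bool


def automation_candidates(tasks: list[Task], min_times_per_month: int = 10) -> list[Task]:
    """Keep frequent, pattern-based tasks and rank them by monthly time spent."""
    eligible = [
        t for t in tasks
        if t.times_per_month > min_times_per_month and t.clear_input_output
    ]
    return sorted(eligible, key=lambda t: t.times_per_month * t.minutes_each, reverse=True)


# Hypothetical task inventory for illustration only.
tasks = [
    Task("weekly sales report", 4, 90, True),            # too infrequent
    Task("product descriptions", 40, 45, True),
    Task("marketplace image resizing", 60, 10, True),
    Task("partnership negotiation", 12, 120, False),     # no clear input/output
]

for t in automation_candidates(tasks):
    print(f"{t.name}: {t.times_per_month * t.minutes_each / 60:.1f} hours/month")
```

The ranking is deliberately crude. It is a starting list for a conversation, not a decision.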

If you're reading this and mentally tallying up 5-10 tasks your team does over and over, you have this signal. If your work feels genuinely unique every single time, AI will have less immediate impact.

Signal 3: Someone on Your Team Has 10+ Hours a Week to Own This

This is the signal most businesses miss.

AI Implementation Time Commitment Curve

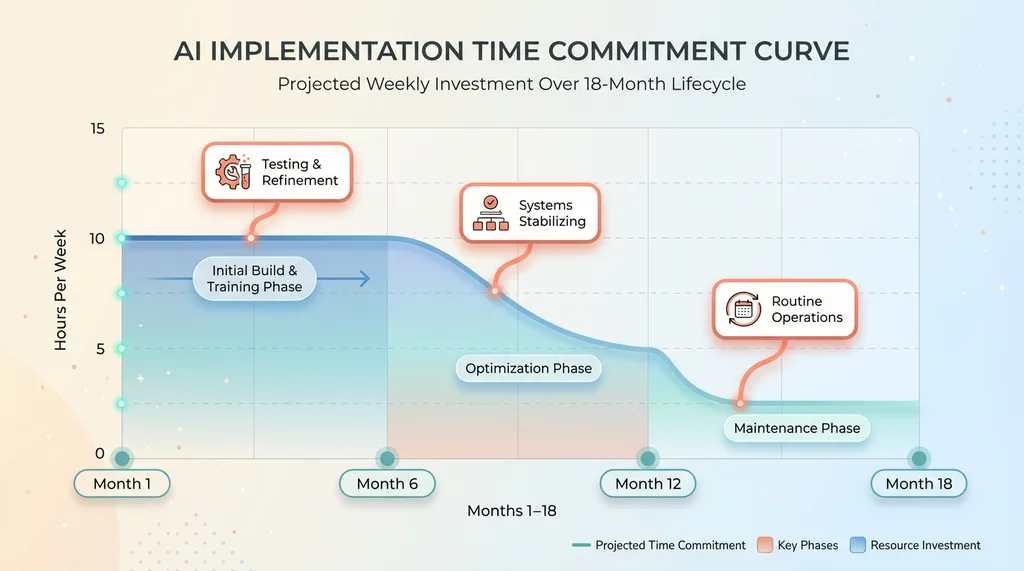

AI isn't "set and forget." Even when you have someone like me building the systems, someone internal needs to own the day-to-day: reviewing AI outputs, providing context it's missing, refining prompts, spotting edge cases, and giving feedback on what's working.

I spent about 10 hours a week on AI systems during the first six months at the brand. I'm the CEO. I wasn't coding (though I do write Python), but I was deeply engaged: testing product descriptions, tweaking the pricing algorithms, training the system on our brand voice, reviewing SEO output.

Now, 18 months in? I spend 2-3 hours a week on AI operations. The systems are stable. But those initial hours were non-negotiable.

If everyone on your team is running at 110% capacity with zero margin for new projects, AI will stall. It'll get deprioritized. The systems will sit unused. I've seen it happen.

Last quarter, I had a call with a potential client — $8M revenue, 15 employees, solid business. The COO wanted AI for customer service and content generation. Legitimate use cases. But when I asked who would own the implementation day-to-day, there was silence.

"Everyone's already maxed out," she admitted. "We were hoping AI would just... work."

I told her to hire another ops person first, then call me back in three months. She wasn't happy, but it was the right answer. Trying to implement AI when nobody has bandwidth is like trying to renovate your house while you're drowning in urgent repairs. Fix the urgent stuff first.

This isn't about technical skill. The person who owns AI doesn't need to code. They need curiosity, attention to detail, and time. If you have someone who fits that description and can carve out 10 hours a week for 3-4 months, you have this signal.

If you don't, you're not ready yet — and that's fine. Bandwidth is fixable.

Signal 4: You Can Measure the Problem You're Trying to Solve

If you can't quantify the pain, you can't quantify the ROI.

Before we implemented AI at the brand, I knew exactly how long product creation took: 3-4 hours per product from concept to published. I knew our customer service response time hovered around 2-3 hours. I knew we were spending $12K/year on freelance writers for blog content.

These weren't sophisticated metrics. I didn't have dashboards or time-tracking software. But I had baselines. When we implemented AI, I could measure impact: product creation dropped to 20 minutes. Customer service response improved. Content costs dropped to near-zero while volume increased.

The results I share publicly — +38% revenue per employee, -42% manual operations time, 3,000+ hours saved annually — aren't perfect calculations. There's estimation involved. But they're real numbers tied to real processes.

If a business owner tells me "we spend too much time on data entry," that's not measurable. If they tell me "our billing team spends 15 hours a week manually reconciling invoices between systems," now we're talking. I can measure 15 hours. I can calculate what 15 hours costs. I can build an AI system and measure whether it actually saves time.
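Here is what "calculate what 15 hours costs" looks like as a sketch. The 15 hours a week comes from the example above; the $40 loaded hourly cost and the 4 hours a week remaining after automation are hypothetical placeholders you would replace with your own numbers.

```python
def annual_cost(hours_per_week: float, hourly_cost: float, weeks_per_year: int = 52) -> float:
    """Yearly cost of a recurring task at a given loaded hourly rate."""
    return hours_per_week * hourly_cost * weeks_per_year


HOURLY_COST = 40.0        # hypothetical loaded cost per hour
HOURS_BEFORE = 15         # from the invoice reconciliation example
HOURS_AFTER = 4           # hypothetical: review time left after automation

baseline = annual_cost(HOURS_BEFORE, HOURLY_COST)
remaining = annual_cost(HOURS_AFTER, HOURLY_COST)

print(f"baseline cost:  ${baseline:,.0f}/year")
print(f"after AI:       ${remaining:,.0f}/year")
print(f"annual savings: ${baseline - remaining:,.0f}")
```

Compare the savings figure against what the system costs to build and run, and you have a defensible ROI estimate instead of a hunch.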

This signal trips up a lot of SMBs because many have never formally measured their processes. You just know things take too long. That's not enough.

The fix is simple but requires discipline: pick one process you think AI could help with, and measure it for two weeks. How long does each instance take? How many times do you do it? What's the error rate? Write it down.
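If you log each instance in a spreadsheet, the summary takes a few lines of Python. This is a minimal sketch: the `TaskInstance` fields and the four sample entries are hypothetical, and a real two-week log would have many more rows.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskInstance:
    minutes: float     # how long this instance took
    had_error: bool    # whether it needed rework


def summarize(log: list[TaskInstance], weeks: float) -> dict[str, float]:
    """Turn a log of task instances into the baseline numbers worth writing down."""
    if not log:
        raise ValueError("log is empty: nothing was measured")
    return {
        "instances_per_week": len(log) / weeks,
        "avg_minutes": mean(i.minutes for i in log),
        "hours_per_week": sum(i.minutes for i in log) / 60 / weeks,
        "error_rate": sum(i.had_error for i in log) / len(log),
    }


# Hypothetical two-week log for one process.
log = [
    TaskInstance(12, False),
    TaskInstance(18, True),
    TaskInstance(15, False),
    TaskInstance(15, False),
]

for metric, value in summarize(log, weeks=2).items():
    print(f"{metric}: {value:.2f}")
```

Those four numbers are the baseline you will compare against once an AI system is in place.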

If you're reading this and thinking "yes, I know exactly how much we spend on X" or "I could tell you how many hours we waste on Y" — you have this signal. If you're thinking "I have no idea how long that actually takes us" — spend a month measuring before you think about AI.

Being ready for AI implementation means having enough operational maturity to measure your own processes. If you can't measure it, you can't improve it, with AI or without.

Signal 5: You're Open to Changing How Work Gets Done

AI isn't a plugin for existing workflows. It reshapes them.

At the brand, our product creation process fundamentally changed. We used to design products, THEN write descriptions. Now the AI generates descriptions during design validation — we use the outputs to test whether a product concept is clear and compelling before we commit to production. If the AI can't write a good description from the mockup, the product concept probably needs work.

That's a process change. Some team members had to learn new tools. We adjusted our quality checkpoints. We redefined what "done" looks like for a product launch.

If your reaction to "we'll need to change how this process works" is resistance — "we've always done it this way" or "our clients expect X" — AI will fail. Not because the technology doesn't work, but because you'll implement it as a bolt-on to existing processes, where it'll create more work instead of less.

I worked with a creative agency last year where the founder wanted AI for content generation but refused to let it touch his "sacred" creative process. Fine. We built AI for everything around it instead: SEO optimization, meta descriptions, social media reformatting, image processing, client reporting.

It worked. We saved his team 12+ hours a week. But we could have saved 30 if he'd been willing to let AI draft initial concepts for his writers to refine, instead of insisting humans do first drafts. His choice — and a legitimate one — but it limited impact.

This is a psychological readiness check, not a technical one.

Leaders who view AI as a collaborative tool — something that handles the tedious parts so humans can focus on high-value work — get results. Leaders who view AI as either a threat (it'll replace us) or a magic wand (it'll fix everything without us changing anything) hit walls.

At the brand, I'm the CEO and I use AI every single day. It doesn't threaten my role. It amplifies it. I spend less time on repetitive tasks and more time on strategy, partnerships, and creative direction. But that only works because I was willing to change how I work.

If you're open to process changes — even uncomfortable ones — you have this signal. If the idea of changing established workflows makes you defensive, you might not be ready yet. AI adoption readiness is as much about mindset as capability.

Signal 6: You Have a Specific Use Case in Mind (Not Just 'We Need AI')

The worst AI projects start with: "Everyone's talking about AI. We should probably do something."

Good vs. Bad AI Use Cases

The best start with: "We spend 15 hours a week on X, and I think AI could do it."

My first AI project at the brand was product descriptions. Why that one? High volume (we had 400+ products at the time, now 564). Clear input and output (product attributes and images in, description and SEO metadata out). Measurable time savings (45 minutes to 3 minutes per product). Immediate ROI.

I didn't start with "implement AI across the business." I started with one specific, high-impact use case.

Contrast that with vague goals like "use AI to improve customer experience." What does that mean? Faster response times? Better product recommendations? Personalized marketing? All of those are possible, but "improve customer experience" isn't actionable. You can't build a system around it.

Good starting use cases for SMBs typically fall into a few categories:

Content generation: Product descriptions, blog articles, SEO metadata, social media posts, email sequences. High volume, pattern-based, time-consuming.

Dynamic pricing: If you have 50+ products and change prices manually, AI can monitor competitors, factor in demand patterns, and optimize pricing automatically. We do this for 564 products at the brand with a 4-tier ABC classification system.

Customer service triage: Not replacing humans, but routing questions, suggesting responses, and handling FAQ-level inquiries so your team focuses on complex issues.

Data processing: Report generation, image resizing, data entry between systems, categorization tasks. Anything that follows clear rules but requires human time.

If you read that list and thought "yes, we have exactly that problem" — you have this signal. If you're thinking "AI sounds cool but I don't know what we'd use it for," you should wait.

AI success stories at SMBs start with specificity. I can't tell you how many discovery calls I've had where a CEO says they want AI but can't articulate what for. When I ask "what's the first process you'd want to automate?" and get a blank stare, we're not ready to work together yet.

You don't need to know the technical implementation. That's my job. But you need to know the problem you're solving.

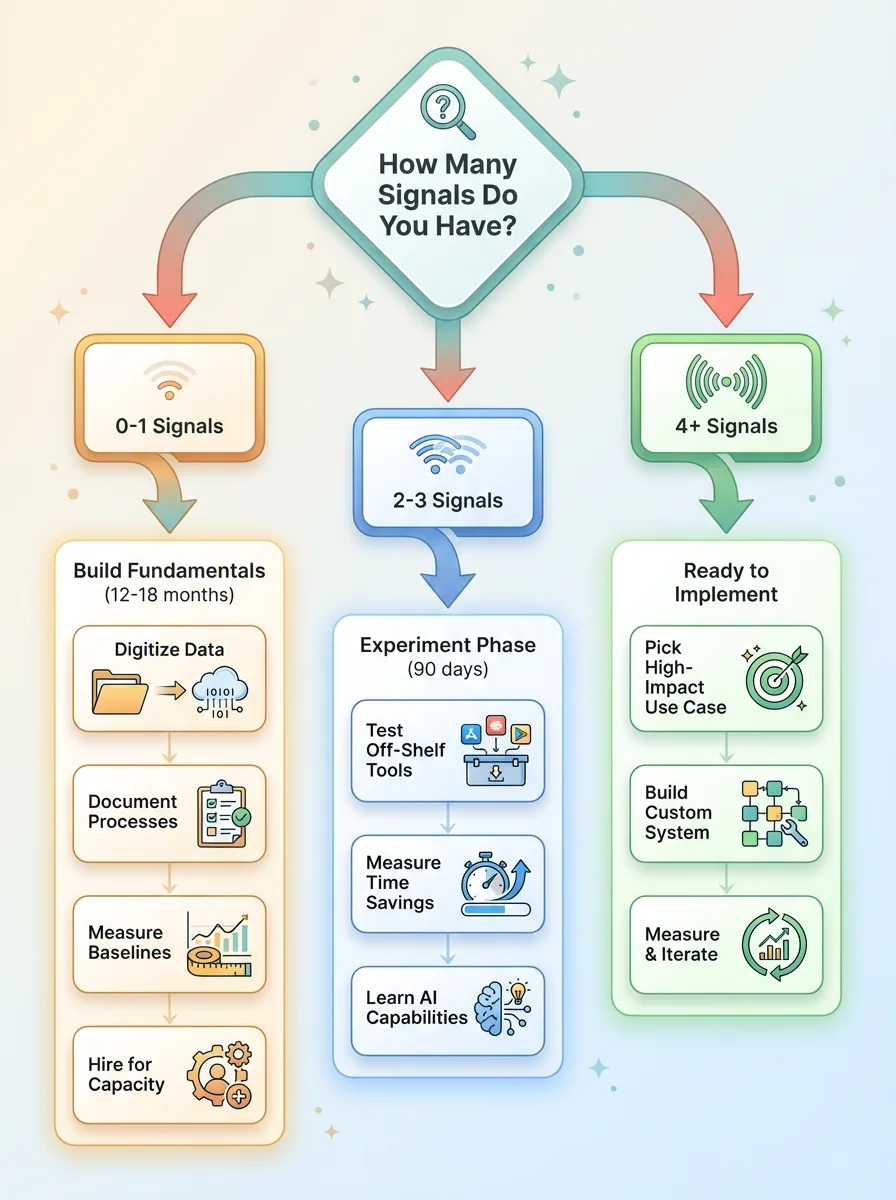

What to Do If You're Not Ready Yet

Three Readiness Paths Decision Tree

If You Have 0-1 Signals

Focus on fundamentals, not AI. You're probably 12-18 months away from AI being worth the investment.

Digitize your data. Move critical information out of heads and filing cabinets into systems. Even spreadsheets are better than paper. Even basic CRMs are better than email inboxes. This work pays for itself in reduced errors and easier knowledge transfer.

Document your processes. Write down how things get done. Not formal SOPs necessarily, but clear enough that a new employee could follow them. If you can't document a process, you can't automate it.

Measure your baselines. Start tracking time on key tasks. How long does X take? How much does Y cost? You need these numbers whether you implement AI or not.

Hire for capacity. If everyone's at 110%, you don't have margin for new initiatives. AI or otherwise. Add bandwidth before you add technology.

Don't feel bad about being here. Most businesses are. Use this time to build operational maturity that'll serve you whether AI ever enters the picture.

If You Have 2-3 Signals

You're close. You can start experimenting without major investment.

Pick one repeatable task — something you do 20+ times a month. Measure how long it takes now. Then spend 5 hours a week for a month testing off-the-shelf AI tools on it. ChatGPT, Claude, Gemini, Zapier, Make — there are dozens of low-cost options.

You're not trying to build production systems yet. You're learning how AI thinks, what it's good at, where it fails. This is research.

One client I advised last year had 3 signals. I told him to experiment for 90 days before hiring anyone or making big investments. He used ChatGPT to generate first drafts of customer emails, Claude to write product descriptions, and Zapier to connect his CRM to his email platform.

Three months later, he'd saved his team 8 hours a week, learned what AI could and couldn't do for his business, and had clear ideas for the next phase. Then we talked about custom systems.

You might not need a Chief AI Officer yet. But you can start learning. When you hit 4-5 signals, you'll know exactly what to build first.

For context on different implementation paths, I wrote about how different AI roles compare — consultant vs. fractional AI strategist vs. full Chief AI Officer. Where you are on the readiness spectrum should inform who you work with.

If You Have 4+ Signals

You're ready. The question isn't whether to implement AI, but how.

Your options: hire an in-house AI engineer (expensive, slow to ramp), work with a consultant (variable results, often too theoretical), or bring on a Chief AI Officer who builds systems for your specific business.

I'm biased, obviously, but here's my take: if you have 5+ signals and a specific use case, you're exactly the type of business I work with. You don't need workshops or strategy decks. You need someone to build the thing, measure the results, and iterate.

Start with one high-impact use case. Build it in 4-8 weeks. Measure results. Then expand to the next one. That's how we went from one AI system at the brand to 15+ systems running in production.

How I Help Businesses That Are Ready

I don't sell AI software. I don't run workshops. I don't deliver strategy presentations.

I build custom AI systems that solve specific, measurable problems for your business. Then I measure the results in dollars and hours saved.

Here's what a typical engagement looks like: You have a clear use case (product content, dynamic pricing, operations automation, whatever). We start there. I spend 4-8 weeks building a custom system using the same multi-LLM architecture I use at the brand — Claude for content quality, Gemini for image processing, custom Python for orchestration and cost efficiency.

You don't need to understand the tech stack. You need to see results. Time saved. Costs reduced. Revenue per employee improving. The same measurable outcomes we achieved at the brand: +38% revenue per employee, -42% manual operations time, 3,000+ hours saved annually.

I've built 15+ AI systems now. I know what works and what wastes time. I know when to use off-the-shelf tools and when to write custom code. I've written 22,000+ lines of Python specifically for business AI automation. This isn't theoretical — it's production systems processing real work every day.

My Chief AI Officer model is simple: I work with 3-5 companies at a time. I'm selective about fit because I actually build the systems myself — I'm not managing a team or delegating to junior engineers. If you're ready, I can get you results in weeks, not quarters.

If you recognized 5+ signals in this article and have a specific process you want to automate, let's talk. I'll review your situation and tell you honestly whether AI makes sense for you right now. Sometimes the answer is yes. Sometimes it's "come back in six months."

Either way, you'll know where you stand.

Ready to Bring AI Leadership Into Your Company?

I work with a small number of companies at a time because I build the systems personally rather than delegating them. If you're serious about AI (not just interested, but ready to commit bandwidth and budget to doing it right), apply to work together and I'll review your application personally.

I'll tell you honestly whether we're a fit. If we are, we'll start with one high-impact system and measure results. If we're not, I'll tell you what to fix first.
