I Automated Blog Writing With AI Agents (You're Reading the Proof)

How a 6-agent AI blog automation pipeline creates high-quality articles for $0.10 each. Real architecture, real costs, real results.

By Mike Hodgen

I review every article this system generates. Including this one.

That might seem weird — why build an AI blog automation system if you still have to check the output? Because the goal isn't to eliminate humans from content creation. It's to eliminate the 90% of the writing process that doesn't require human judgment.

This article is the 313th generated by my six-agent pipeline, which I deployed four months ago. Before that, I wrote maybe 1-2 blog posts per month when I had time. Now we publish 12-16 articles monthly at the brand, and the quality metrics are close to what I achieved writing manually.

The difference between this and the AI slop flooding the internet: specialized prompts, a 3,400-word brand voice file, multi-agent review architecture, and a human approval gate. Every article gets 10-15 minutes of my time before it goes live. If something's off strategically or doesn't sound like me, it doesn't publish.

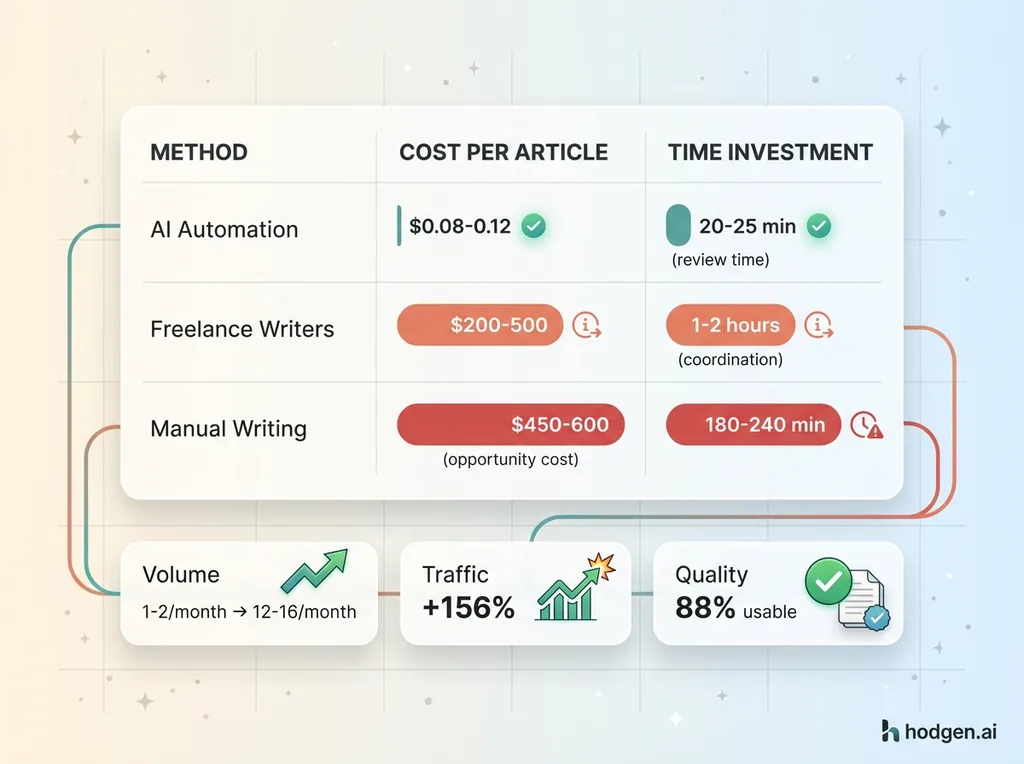

Cost per article: $0.08-0.12. Compare that to $200-500 for freelance writers or the 3-4 hours of founder time I used to spend writing these myself. At $150/hour, that's $450-600 per article in opportunity cost.

Building an AI content pipeline like this is what a Chief AI Officer actually does: designing systems that amplify human judgment rather than replace it. Content is still king for SEO, but manual creation doesn't scale. This does.

The 6-Agent Architecture: How the Pipeline Actually Works

Most people think AI blog writing is one big prompt. Wrong. It's a chain of specialized agents, each handling a specific part of the workflow.

Agent 1: The Strategist (Topic Selection & Keyword Research)

The Strategist analyzes keyword gaps using SEMrush data and Google Search Console exports. It looks at what competitors rank for, identifies topics where we have expertise but no content, and prioritizes based on search volume and business goals.

It doesn't just pick popular keywords. It evaluates whether a topic fits our brand voice and serves a specific funnel stage. A high-volume keyword that attracts the wrong audience is worthless. The Strategist generates a strategic brief: target keyword, secondary keywords, competitive landscape, recommended angle, and suggested word count.

This agent runs weekly. It maintains a backlog of 40-50 vetted topics ready for the Writer.
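The Strategist's output is essentially a structured record plus a ranked backlog. A minimal sketch of that shape, assuming a simple "search volume times business fit" score; the `business_fit` field, the 0.5 cutoff, and the weighting are hypothetical, not the pipeline's actual scoring:

```python
from dataclasses import dataclass

@dataclass
class StrategicBrief:
    # Fields from the brief described above, plus two hypothetical scoring inputs.
    target_keyword: str
    secondary_keywords: list[str]
    competitive_landscape: str
    recommended_angle: str
    suggested_word_count: int
    monthly_search_volume: int  # e.g. taken from a SEMrush export
    business_fit: float         # hypothetical 0.0-1.0 judgment of audience and funnel fit

def priority(brief: StrategicBrief) -> float:
    # Volume only counts to the extent the topic fits the business.
    return brief.monthly_search_volume * brief.business_fit

def build_backlog(briefs: list[StrategicBrief], min_fit: float = 0.5,
                  size: int = 50) -> list[StrategicBrief]:
    """Drop poor-fit topics, then keep the highest-priority `size` briefs."""
    vetted = [b for b in briefs if b.business_fit >= min_fit]
    return sorted(vetted, key=priority, reverse=True)[:size]
```

The fit filter runs before ranking so that a high-volume keyword attracting the wrong audience never enters the backlog, however large its volume.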

Agent 2: The Writer (First Draft Generation)

The Writer takes the strategic brief and generates 1,800-2,200-word first drafts. It uses Claude Opus because nuanced writing requires better reasoning than cheaper models can deliver. I tested GPT-4, Gemini Pro, and Claude across 50 articles. Claude had the lowest rate of AI tells and the best voice consistency.

The Writer has access to the brand voice file — 3,400 words documenting my actual writing patterns, forbidden phrases, sentence length targets, and how I handle technical topics. It also has negative examples: a file of generic AI slop patterns to avoid.

It doesn't try to sound like me through vague instructions like "write conversationally." It follows specific rules: vary sentence length, never open a paragraph with "In today's...", use concrete numbers instead of vague claims, and acknowledge limitations honestly.

Draft generation takes 4-6 minutes.
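How the Writer's inputs fit together can be sketched as plain prompt assembly. This is an illustration under assumptions: the brief keys (`target_keyword`, `angle`, `word_count`) and section labels are hypothetical, and the rules restate the ones listed above rather than the real voice file:

```python
def build_writer_prompt(brief: dict, voice_file: str, negative_examples: str) -> str:
    """Assemble the Writer agent's prompt from the brief and the voice files.

    Brief keys and section labels are hypothetical; the rules restate
    the ones described in the text.
    """
    rules = [
        "Vary sentence length.",
        "Never start a paragraph with \"In today's\".",
        "Use concrete numbers, not vague claims.",
        "Acknowledge limitations honestly.",
    ]
    sections = [
        "BRAND VOICE FILE:\n" + voice_file,
        "PATTERNS TO AVOID:\n" + negative_examples,
        "RULES:\n" + "\n".join(f"- {rule}" for rule in rules),
        "BRIEF:\n"
        f"Target keyword: {brief['target_keyword']}\n"
        f"Angle: {brief['angle']}\n"
        f"Length: {brief['word_count']} words",
    ]
    return "\n\n".join(sections)
```

In practice the voice file and negative examples would be read from disk and the resulting string sent to the model as its instructions; the model call is left out here rather than guessing at a particular SDK.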

Agent 3: The Editor (Quality Control & Voice Alignment)

The Editor is the quality gate. It checks drafts against editorial criteria: Does this sound like Mike? Are factual claims accurate against our knowledge base? Any AI tells that slipped through?

This is where AI quality control systems earn their keep. The Editor flags generic phrases, clichés, unsupported claims, and voice drift. It compares against the brand voice file and scores alignment on a 1-10 scale. Anything below 7 gets flagged for revision.

It also verifies facts. If the Writer claims "38% revenue increase," the Editor checks that against our actual metrics. AI hallucination is less common with Claude Opus but still happens. The Editor catches it.

This agent adds 2-3 minutes to the pipeline but prevents most of the garbage from reaching human review.
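The Editor's two gates (the 1-10 voice score with a cutoff at 7, and checking figures against real metrics) can be sketched as follows. The voice score is assumed to come from the Editor model; the knowledge base is a hypothetical dict, and the regex only catches claims shaped like "38% revenue increase", so treat it as a crude stand-in for real fact-checking:

```python
import re
from dataclasses import dataclass

VOICE_THRESHOLD = 7  # alignment scores below 7 (on the 1-10 scale) get flagged

# Crude pattern for claims like "38% revenue increase": a percentage plus two words.
CLAIM = re.compile(r"(\d+(?:\.\d+)?)% ([a-z]+ [a-z]+)")

@dataclass
class EditorReport:
    voice_score: int  # 1-10, assumed to be produced by the Editor model
    issues: list[str]

    @property
    def needs_revision(self) -> bool:
        return self.voice_score < VOICE_THRESHOLD or bool(self.issues)

def check_percent_claims(draft: str, known_metrics: dict[str, float],
                         tolerance: float = 0.5) -> list[str]:
    """Compare percentage claims in a draft with a (hypothetical) metrics store."""
    issues = []
    for value, metric in CLAIM.findall(draft.lower()):
        actual = known_metrics.get(metric)
        if actual is None:
            issues.append(f"unverified claim: {value}% {metric}")
        elif abs(float(value) - actual) > tolerance:
            issues.append(f"claim {value}% {metric} contradicts knowledge base ({actual}%)")
    return issues
```

Unknown metrics are flagged as unverified rather than passed, which errs toward sending more drafts to human review.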

Agent 4: The Visual Analyst (Image Requirements)

The Visual Analyst determines where images actually add value versus decorative filler. Not every article needs visuals. Some benefit from custom diagrams, others from concept illustrations, some from data visualizations.

It specifies exact requirements: "Blog header image: abstract representation of AI agents collaborating, minimalist style, 1200x630." Or: "Data visualization: bar chart comparing cost per article across manual writing, freelance, and AI automation."

This prevents the common mistake of slapping stock photos on every article because you feel like you should. Images should serve the content, not decorate it.

Agent 5: The Image Generator (Visual Assets)

The Image Generator creates custom visuals based on the Analyst's specifications. I use Gemini for most images because it's fast and cheap ($0.02-0.04 per image). For complex illustrations or specific style requirements, I switch to DALL-E.

This is part of my broader multi-model AI architecture strategy — use the cheapest model that meets quality requirements. Gemini is fine for blog headers. DALL-E is worth the extra cost for product concept visualization.
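The "cheapest model that meets quality requirements" rule reduces to a filter plus a minimum. A sketch under assumptions: the quality tiers are hypothetical, Gemini's cost is the midpoint of the $0.02-0.04 range quoted above, and the DALL-E cost is a placeholder, not a quoted price:

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ImageModel:
    name: str
    cost_per_image: float  # USD
    quality_tier: int      # hypothetical scale: 1 = blog header, 3 = complex illustration

MODELS = [
    ImageModel("gemini", 0.03, 2),  # midpoint of the range quoted in the text
    ImageModel("dall-e", 0.08, 3),  # placeholder cost, not a quoted price
]

def pick_model(models: list[ImageModel], required_tier: int) -> ImageModel:
    """Return the cheapest model whose quality tier meets the requirement."""
    eligible = [m for m in models if m.quality_tier >= required_tier]
    if not eligible:
        raise ValueError(f"no model meets quality tier {required_tier}")
    return min(eligible, key=lambda m: m.cost_per_image)
```

The Visual Analyst's spec would set `required_tier`: a blog header routes to the cheap model, a product concept visualization to the more capable one.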

Generated images get human review too. About 15% get regenerated with adjusted prompts.

Agent 6: The Publisher (Formatting & Deployment)

The Publisher converts finished articles to markdown, adds internal links based on topic relevance, generates meta descriptions and title tags, and submits everything to the CMS.

It follows specific formatting rules: H2 for main sections, H3 for subsections, short paragraphs, bold for emphasis. It identifies opportunities for internal links by scanning existing content for related topics.

The Publisher doesn't auto-publish. It stages articles for human review and approval. But it handles all the tedious formatting work that used to take 20-30 minutes per article.
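The post doesn't say how the Publisher judges topic relevance for internal links. One simple possibility, swapped in here purely for illustration, is keyword overlap (Jaccard similarity) between the draft and each existing post's title or summary; the stopword list, `min_score`, and `top_n` are hypothetical:

```python
import re

STOPWORDS = {"the", "a", "an", "and", "or", "for", "to", "of", "in", "on",
             "with", "how", "why", "is", "your"}

def keywords(text: str) -> set[str]:
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def suggest_internal_links(draft: str, existing: dict[str, str],
                           top_n: int = 3, min_score: float = 0.05) -> list[str]:
    """Rank existing posts (url -> title or summary) by keyword overlap with the draft."""
    draft_kw = keywords(draft)
    scored = []
    for url, text in existing.items():
        kw = keywords(text)
        union = draft_kw | kw
        score = len(draft_kw & kw) / len(union) if union else 0.0
        if score >= min_score:
            scored.append((score, url))
    scored.sort(reverse=True)
    return [url for _, url in scored[:top_n]]
```

A production system would more likely use embeddings, but overlap scoring is easy to audit when a suggested link looks wrong.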

Total pipeline time from topic selection to draft ready for review: 8-12 minutes.

This isn't a single mega-prompt. It's a sequential chain of specialized agents, each using the model best suited for its task.
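Structurally, the chain is a sequence of functions where each agent receives the previous agent's output. A minimal sketch with stub agents standing in for real model calls; the state keys and stub outputs are hypothetical, and in this sketch the Strategist runs inline rather than on its weekly schedule:

```python
from typing import Callable

State = dict
Agent = Callable[[State], State]

def run_pipeline(agents: list[tuple[str, Agent]], state: State) -> State:
    """Run agents in order; each one receives the previous agent's output."""
    completed = []
    for name, agent in agents:
        # Shallow copy: keys an agent adds or replaces don't leak into earlier states.
        state = agent(dict(state))
        completed.append(name)
    return {**state, "completed": completed}

# Stubs standing in for real model calls (hypothetical outputs).
def strategist(s: State) -> State:
    return {**s, "brief": f"brief for {s['topic']}"}

def writer(s: State) -> State:
    return {**s, "draft": f"draft from {s['brief']}"}

def editor(s: State) -> State:
    return {**s, "voice_score": 8}

def visual_analyst(s: State) -> State:
    return {**s, "image_specs": ["blog header, 1200x630"]}

def image_generator(s: State) -> State:
    return {**s, "images": [f"image for: {spec}" for spec in s["image_specs"]]}

def publisher(s: State) -> State:
    return {**s, "status": "staged_for_review"}  # stages only; never auto-publishes

PIPELINE = [
    ("strategist", strategist),
    ("writer", writer),
    ("editor", editor),
    ("visual_analyst", visual_analyst),
    ("image_generator", image_generator),
    ("publisher", publisher),
]
```

Keeping each agent a separate function is what lets each stage use a different model and prompt.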

Why Human Review Is Still Essential (Even at $0.10 Per Article)

The obvious question: if it's automated, why do you still review?

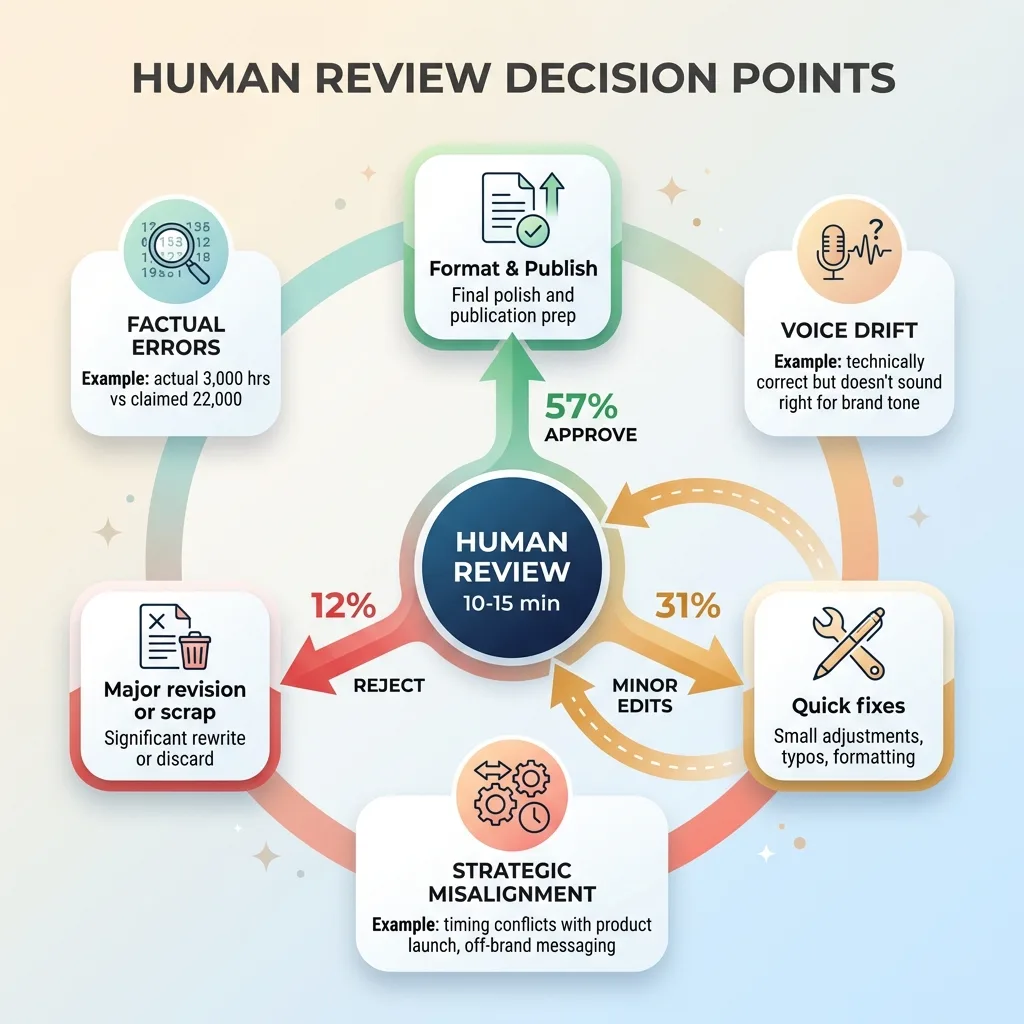

Human Review Decision Points

Because AI can follow patterns. It can't make judgment calls about strategy.

Example: The system generated a solid article about "AI for inventory management" — technically accurate, good writing, no obvious flaws. But we'd just launched a new product line that changed our inventory strategy. Publishing that article would have confused customers and contradicted our messaging. The AI didn't know that. I caught it in review.

Another example: An article about pricing optimization was good but poorly timed. We were in the middle of a sale. Publishing content about our AI pricing engine while running 30% off promotions would have raised questions we didn't want to answer yet. Strategic context, not quality, killed that article.

Human review catches three things the agents miss:

Factual errors that slip through. Claude Opus hallucinates less than other models, but it still happens. The Editor agent catches obvious mistakes, but subtle errors need human judgment. I killed an article that claimed we saved "22,000 hours annually" when the actual number was closer to 3,000. The AI extrapolated incorrectly from partial data.

Strategic misalignment. An article might be technically excellent but not serve the business goal. Is this the right funnel stage? Does it support our positioning? Will it attract the audience we want? The AI optimizes for the brief it received. If the brief is slightly off, the article will be slightly off.

Voice drift. Sometimes an article is technically correct but doesn't sound like me. It hits all the rules in the brand voice file but misses the underlying tone. Hard to quantify, easy to hear when you read it.

Review takes 10-15 minutes per article versus 3-4 hours to write from scratch. I'm not doing the writing. I'm doing the judgment calls only a human can make.

Rejection rate: about 12% of generated articles get sent back for major revision or scrapped entirely. Another 31% need minor edits — a paragraph rewritten, a transition smoothed, a claim softened. Only 57% publish with just formatting changes.

That's not a weakness. That's the system working correctly.

The goal isn't to eliminate humans from content creation. It's to eliminate the parts that don't require human judgment. Researching keywords, structuring outlines, writing transitions, formatting markdown — all mechanical. Strategic decisions, voice consistency, business context — all human.

The Hard Part Isn't the AI, It's Defining Your Brand Voice

Most people trying AI blog automation fail here.

They think the problem is finding the right AI tool or the perfect prompt. Wrong. The problem is: most companies don't actually know what their brand voice is beyond vague words like "professional" or "friendly."

You can't automate something you can't articulate. If you can't explain your brand voice to a human writer in specific, measurable terms, an AI won't magically figure it out.

Building this pipeline required 40+ hours of analysis before the first usable article. I documented my actual writing patterns from 50+ existing blog posts and emails. Sentence length distribution. Paragraph structure. How I introduce technical concepts. How I transition between ideas. Specific phrases I use repeatedly. Phrases I never use.

I created a negative example file — 1,200 words of AI slop patterns to avoid. "In today's rapidly evolving landscape..." "Unlock the power of..." "Leverage synergies..." Generic consultant-speak that makes me want to throw my laptop out the window.

I defined specific writing rules. Target sentence length: 12-18 words average, but vary from 5 to 30+ for rhythm. Paragraph length: 2-4 sentences max. Technical terms: define on first use, but don't over-explain to an expert audience. Credibility: use concrete numbers, acknowledge limitations, cite real examples.
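Rules stated this concretely can be checked mechanically. A sketch that tests a draft against the sentence-length target, the four-sentence paragraph cap, the "In today's" opener ban, and the phrases quoted from the negative-example file; the sentence splitter is deliberately naive, and the real voice file is far longer than this phrase list:

```python
import re

# Phrases quoted from the negative-example file described above.
FORBIDDEN = ["in today's rapidly evolving landscape", "unlock the power of", "leverage synergies"]

def split_sentences(paragraph: str) -> list[str]:
    # Naive splitter: breaks after ., ! or ? followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", paragraph.strip()) if s]

def check_voice(text: str, avg_range=(12, 18), max_para_sentences=4) -> list[str]:
    """Return rule violations; an empty list means the draft passes."""
    issues = []
    paragraphs = [p for p in text.split("\n\n") if p.strip()]
    sentences = [s for p in paragraphs for s in split_sentences(p)]
    if sentences:
        avg = sum(len(s.split()) for s in sentences) / len(sentences)
        if not avg_range[0] <= avg <= avg_range[1]:
            issues.append(f"average sentence length {avg:.1f} words is outside {avg_range}")
    for i, para in enumerate(paragraphs, start=1):
        n = len(split_sentences(para))
        if n > max_para_sentences:
            issues.append(f"paragraph {i} has {n} sentences")
        if para.strip().lower().startswith("in today's"):
            issues.append(f"paragraph {i} opens with \"In today's\"")
    lowered = text.lower()
    issues += [f"forbidden phrase: {p!r}" for p in FORBIDDEN if p in lowered]
    return issues
```

Only the measurable rules are covered; "acknowledge limitations honestly" still needs the Editor model or a human.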

The brand voice file is 3,400 words. That's longer than most of the articles it generates.

This took multiple iterations. Early drafts were technically correct but generic. They sounded like every other AI-generated business blog. I kept refining the voice file, adding specific examples, tightening the rules.

The breakthrough came when I stopped trying to describe my voice ("conversational but authoritative") and started documenting patterns ("67% of paragraphs are 2 sentences, 28% are 3 sentences, 5% are single-sentence for emphasis").
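Numbers like "67% of paragraphs are 2 sentences" come from measuring existing writing rather than describing it. A sketch of that measurement over a list of posts, assuming paragraphs are separated by blank lines and using the same naive sentence splitter as any quick script would:

```python
import re
from collections import Counter

def paragraph_length_distribution(posts: list[str]) -> dict[int, int]:
    """Percent of paragraphs by sentence count across existing posts (rounded)."""
    counts = Counter()
    for post in posts:
        for para in post.split("\n\n"):
            if para.strip():
                sentences = [s for s in re.split(r"(?<=[.!?])\s+", para.strip()) if s]
                counts[len(sentences)] += 1
    total = sum(counts.values())
    if total == 0:
        return {}
    return {n: round(100 * c / total) for n, c in sorted(counts.items())}
```

Run over 50+ posts and emails, the resulting percentages can go straight into the voice file as targets.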

AI is excellent at consistency once you define the rules. Far better than human writers who drift over time or try to "add their own flair." But defining those rules is human work. Deep, analytical, time-consuming human work.

Most businesses skip this step. They feed the AI a few example articles and hope for the best. Then they're disappointed when the output is generic.

The AI isn't the constraint. Your ability to articulate what makes your content yours — that's the constraint.

Real Numbers: Cost, Speed, and Quality Metrics

These numbers come from the first 90 days in production. Here's what actually happened.

Cost & Time Comparison

Cost per article: $0.08-0.12 depending on length and image complexity.

Breakdown: Claude Opus API calls run $0.04-0.06 per article. That's the Writer and Editor combined. Gemini for images adds $0.02-0.04. Infrastructure and hosting cost roughly $0.02 per article when you spread the fixed costs across volume.

Compare that to alternatives. Freelance writers charge $200-500 for a 2,000-word article. If I wrote them myself at $150/hour opportunity cost, 3-4 hours per article means $450-600 in founder time.

At $0.10 per article, I could generate 2,000-5,000 articles for the cost of one freelance piece. Obviously I don't need 5,000 articles. But the unit economics are absurd.
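The cost arithmetic above is easy to reproduce. This sketch uses only the figures quoted in the breakdown; the component names are labels chosen for illustration:

```python
# Per-article cost ranges in USD (low, high), from the breakdown above.
COST_COMPONENTS = {
    "claude_opus_writer_and_editor": (0.04, 0.06),
    "gemini_images": (0.02, 0.04),
    "infrastructure_amortized": (0.02, 0.02),
}

def total_cost_range(components: dict[str, tuple[float, float]]) -> tuple[float, float]:
    low = sum(lo for lo, _ in components.values())
    high = sum(hi for _, hi in components.values())
    return round(low, 2), round(high, 2)  # round away floating-point noise

def articles_for_budget(budget: float, cost_per_article: float = 0.10) -> int:
    return round(budget / cost_per_article)
```

The components sum to the $0.08-0.12 range, and a $200-500 freelance fee covers 2,000-5,000 pipeline runs at $0.10 each.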

Speed: 8-12 minutes for the full pipeline from topic selection to draft ready for review, compared to 3-4 hours writing manually. Reviewing and approving an article takes me 10-15 minutes. Total time per article: roughly 20-25 minutes versus 180-240 minutes, about a 9x reduction.
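The 9x figure counts pipeline minutes as part of the time investment. Assuming the pipeline runs unattended, the founder's hands-on time is only the review, which gives a second, larger ratio. A sketch using the midpoints of the quoted ranges:

```python
def speedups(manual_min: float, pipeline_min: float, review_min: float) -> tuple[float, float]:
    """Return (elapsed-time speedup, hands-on speedup) versus writing manually."""
    elapsed = pipeline_min + review_min  # the total counted in the paragraph above
    hands_on = review_min                # assumes the pipeline needs no attention while running
    return manual_min / elapsed, manual_min / hands_on

# Midpoints of the ranges above: 3.5 hours manual, 10 min pipeline, 12.5 min review.
elapsed_x, hands_on_x = speedups(210, 10, 12.5)
```

At those midpoints the elapsed ratio is about 9.3x and the hands-on ratio about 16.8x.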

Volume: Went from 1-2 articles per month manual to 12-16 articles per month automated. I could push higher, but I'm limited by review capacity and strategic topics worth covering. There's no point publishing articles just because you can.

Quality metrics after 90 days:

Average time on page: 3:24. Industry benchmark for blog content is around 2:30. Automated articles are performing above average, though manually written articles averaged 3:51 before the pipeline. Slight quality drop, but not dramatic.

Bounce rate: 42% on automated articles versus 38% on manual articles. Close enough that I'm not concerned. Some topics naturally have higher bounce rates based on search intent.

SEO performance: 23% of automated articles now rank in the top 10 for their target keywords within 60 days. That's comparable to manual articles at 26%. The AI isn't hurting rankings.

Rejection rate: 12% need significant rework or get scrapped. 31% need minor edits. 57% publish with only formatting changes.

Organic traffic is up 156% since pipeline deployment. Can't attribute all of that to volume increase — quality matters too, and we made other SEO improvements during the same period. But going from 1-2 articles per month to 12-16 definitely contributed.

The system isn't perfect. It generates some mediocre content that I reject. But the hit rate is high enough that the economics work. At $0.10 per attempt and 88% usable output, the cost per published article is still only $0.11.
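The $0.11 figure spreads the cost of every attempt over only the usable output (the 57% published as-is plus the 31% needing minor edits). The same division applies to review time. The sketch below assumes every draft, kept or not, gets about 12.5 minutes of review, which is an assumption rather than a measured number:

```python
def per_published(per_attempt: float, usable_rate: float) -> float:
    """Spread a per-attempt cost (dollars or minutes) over only the usable articles."""
    if not 0 < usable_rate <= 1:
        raise ValueError("usable_rate must be in (0, 1]")
    return per_attempt / usable_rate

USABLE_RATE = (57 + 31) / 100  # published as-is plus minor edits

dollars = per_published(0.10, USABLE_RATE)
review_minutes = per_published(12.5, USABLE_RATE)  # assumes ~12.5 min review per draft
```

This yields about $0.11 and about 14.2 minutes of review per published article, which shows that review time, not API spend, is the real unit cost.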

What This Means for Your Content Strategy

This approach works best for companies that already have a content strategy and brand voice. It accelerates execution. It doesn't create strategy.

If you're already producing educational or informational content and founder time is the bottleneck, this system makes sense. You shift from writing to reviewing — still essential, far less time-consuming.

It works for businesses that have at least 20-50 topics worth covering. You need enough volume to justify the setup investment. One-off content needs don't benefit from automation.

This doesn't work for companies that haven't defined positioning or voice yet. Garbage in, garbage out. If you don't know what your brand sounds like, automating content creation just produces garbage faster.

It doesn't work for pure thought leadership where unique insights are the entire value proposition. If your content is "here's what I uniquely believe about X," AI can't manufacture that. It can help structure and polish your ideas, but the insights have to come from you.

It doesn't work for content requiring deep interviews or original research. The system can synthesize existing information, but it can't conduct primary research or interview subject matter experts.

The ROI calculation is simple: If you're currently publishing fewer than 2 articles per month because writing is a bottleneck, and you have at least 20 topics to cover, this system pays for itself in month one.

Setup cost: 40-60 hours to build and tune the system plus brand voice definition. That's front-loaded investment. Most of that time is documenting your voice and testing iterations until output quality is consistent.

Ongoing time: 10-15 minutes review per article. About 2 hours per month on system maintenance — updating the brand voice file, refining agent prompts, checking quality metrics.
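To check the payback claim against your own numbers, a break-even sketch helps. It values setup and writing time at the same hourly rate and ignores the monthly maintenance hours; both are simplifying assumptions:

```python
import math

def break_even_articles(setup_hours: float, hourly_rate: float, manual_hours: float,
                        review_minutes: float, cost_per_article: float) -> int:
    """Published articles needed before saved founder time repays the setup time."""
    setup_cost = setup_hours * hourly_rate
    saved_per_article = (manual_hours * hourly_rate
                         - review_minutes / 60 * hourly_rate
                         - cost_per_article)
    if saved_per_article <= 0:
        raise ValueError("automation saves nothing per article at these inputs")
    return math.ceil(setup_cost / saved_per_article)
```

With the article's figures (40-60 setup hours at $150/hour, the 3.5-hour midpoint of manual writing time, 12.5 minutes of review, $0.10 per article), this comes out to 13-19 published articles.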

At the brand, this is one system in a portfolio of 15+ AI implementations. It connects to our broader strategy: we use AI for product creation, SEO optimization, pricing, customer service, and inventory management. The blog pipeline feeds into the SEO system, which informs the product pipeline, which connects to pricing.

It's not a one-off automation. It's part of an integrated approach where AI systems reinforce each other.

If you're thinking about AI for content, start by answering one question: Can you articulate your brand voice in specific, measurable terms? If yes, automation makes sense. If no, spend time there first. The AI is easy. Knowing what you want it to produce — that's the hard part.

Thinking About AI for Your Business?

If this resonated, let's talk about building a system like this for your business.

I do free 30-minute discovery calls where we look at your operations and identify where AI could actually move the needle. Not every business needs a six-agent content pipeline. Some need simpler systems. Some need more complex ones. Some aren't ready for AI automation yet.

The goal isn't to sell you on AI. It's to figure out if AI makes sense for your specific situation, and if so, what the highest-value use case is. Sometimes that's content. Sometimes it's pricing. Sometimes it's customer service. Sometimes it's "come back in six months after you've documented your processes."

Book a discovery call. Worst case, you spend 30 minutes thinking clearly about AI strategy. Best case, we identify a system that saves 3,000+ hours annually, like the one we built at my DTC fashion brand.

Ready to automate your growth?

Book a free 30-minute strategy call with Hodgen.AI.
