I Overhauled 463 Blog Articles With AI in 48 Hours

AI content optimization at scale: how I audited, rewrote, and generated images for 463 blog articles in 48 hours. Real numbers, real pipeline, real results.

By Mike Hodgen

A client came to me with what looked like a healthy blog. 463 published articles. Consistent posting cadence. All the surface-level indicators of a content program that was working.

It wasn't working.

When I pulled the data, the picture was ugly. Over half the articles were 400-word filler pieces — the kind of content that checks a box on an editorial calendar but does nothing for search. Dozens had ChatGPT artifacts baked into the HTML: weird formatting tags, leftover system prompt echoes, metadata that screamed "this was generated and never edited." Hundreds had no featured images. Heading structures were inconsistent from article to article, like five different people had written them with five different style guides. Which, knowing the content farm history, was probably exactly what happened.

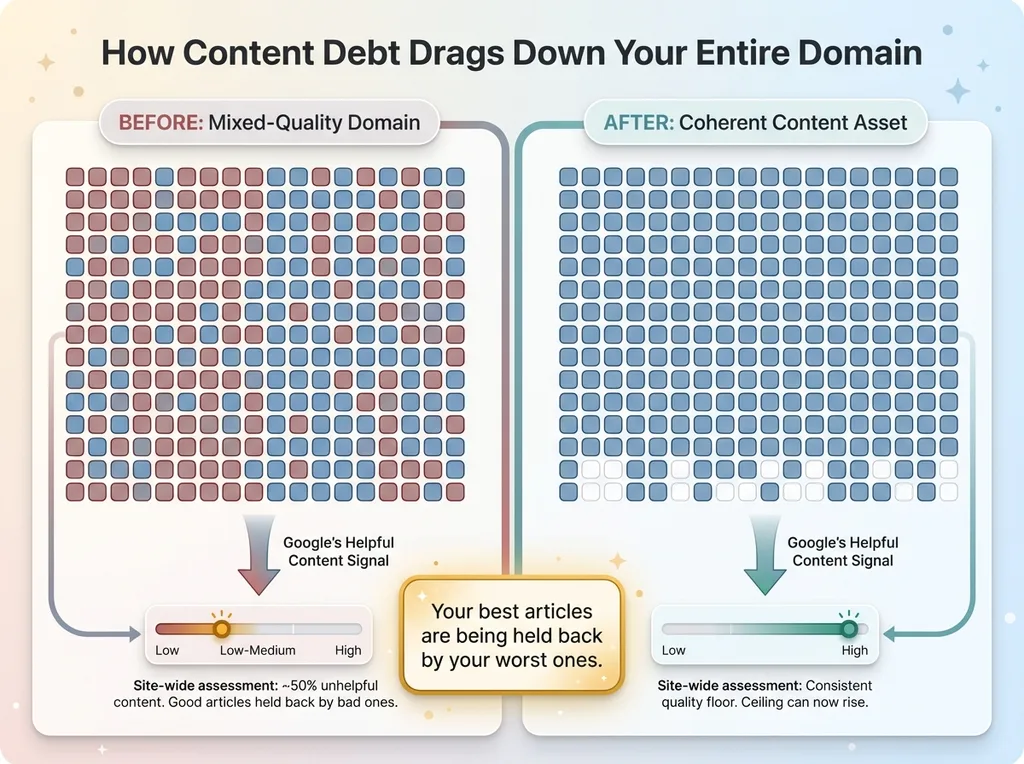

Here's the thing most people miss about AI content optimization at scale: Google's helpful content system doesn't just evaluate individual pages. It evaluates your entire domain. Having 200+ thin articles wasn't just wasting space — it was actively dragging down the ranking potential of the 200+ articles that were actually decent. The site's organic traffic was underperforming what its domain authority should have supported, and this was the reason.

This is a pattern I see constantly in ecommerce. Brands accumulate content debt the same way they accumulate technical debt. A cheap content agency here, an early ChatGPT experiment there, a few intern blog posts that never got edited. It adds up. And then one day you realize your blog isn't an asset — it's a liability.

This wasn't a cosmetic cleanup. It was a domain-level SEO intervention, and it needed to happen fast.

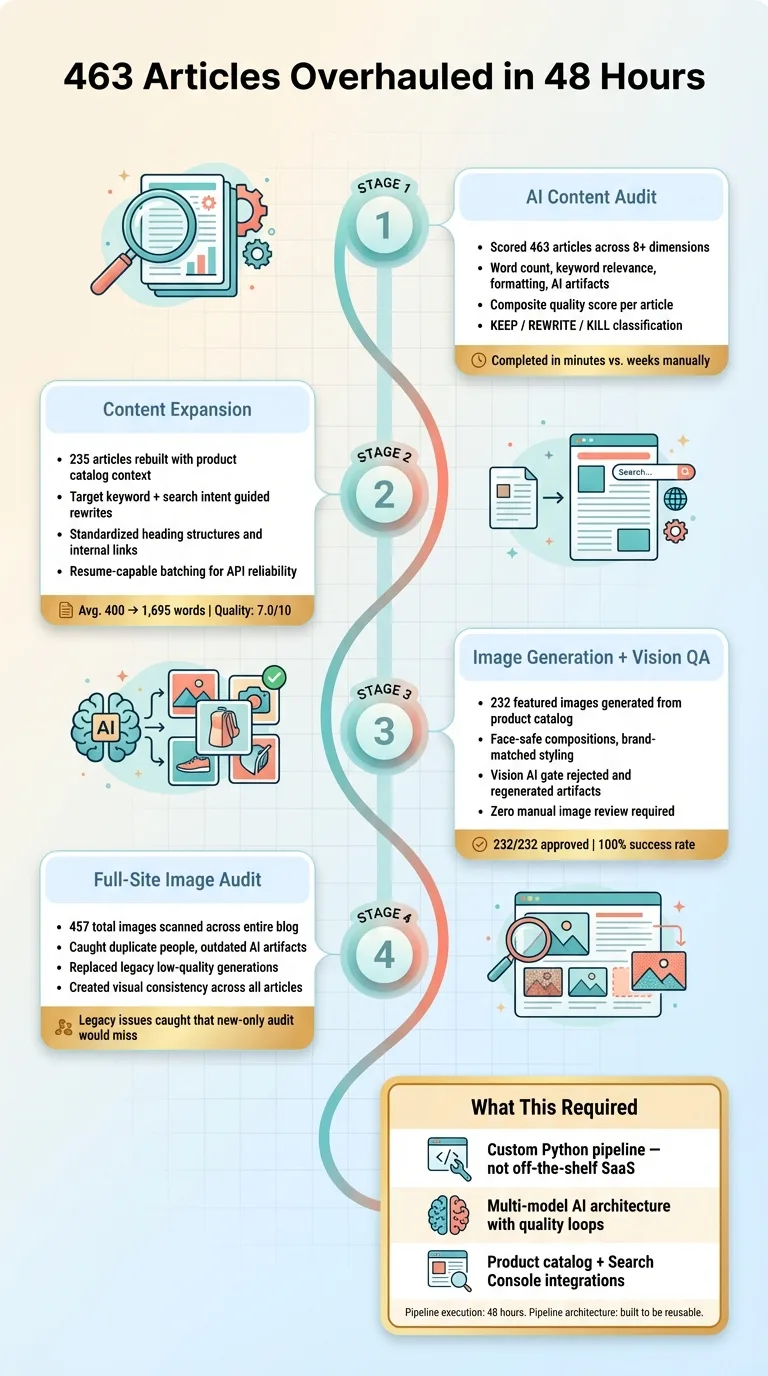

Stage 1: The AI Audit That Scored Every Article

Before touching a single article, every piece of content had to be evaluated. Not skimmed. Not spot-checked. Evaluated with consistent criteria across all 463 articles.

What the Audit Measured

The automated audit engine scored each article across multiple dimensions: word count, content depth relative to its target keyword, keyword relevance and density, formatting consistency (heading structure, paragraph length, list usage), presence of featured images, internal link density, and whether the article contained common AI artifacts. Those artifacts are more specific than you'd think — certain HTML patterns, specific filler phrases ("In conclusion," "It's worth noting that"), and formatting quirks that are telltale signs of unedited ChatGPT output.

Each article received a composite quality score. Not a pass/fail — a nuanced score that weighted these factors based on their actual impact on search performance.
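
To make the idea of a weighted composite score concrete, here is a minimal sketch. It is not the audit engine from this project: the weights, the saturation points, and the `Article` fields are all illustrative assumptions, and keyword relevance is left out for brevity. Only the two filler phrases come from the list above; the third pattern is a hypothetical example of a prompt echo.

```python
import re
from dataclasses import dataclass

# Hypothetical weights (they sum to 1.0); the real weighting is not published here.
WEIGHTS = {
    "depth": 0.35,
    "formatting": 0.20,
    "internal_links": 0.15,
    "featured_image": 0.10,
    "artifact_free": 0.20,
}

# Two filler phrases named in the article plus one illustrative prompt-echo pattern.
ARTIFACT_PATTERNS = [
    re.compile(r"\bin conclusion\b", re.IGNORECASE),
    re.compile(r"\bit'?s worth noting that\b", re.IGNORECASE),
    re.compile(r"\bas an ai language model\b", re.IGNORECASE),
]


@dataclass
class Article:
    slug: str
    body: str
    heading_count: int
    internal_links: int
    has_featured_image: bool


def count_artifacts(text: str) -> int:
    """Count occurrences of known AI-artifact phrases in the text."""
    return sum(len(p.findall(text)) for p in ARTIFACT_PATTERNS)


def score_article(a: Article, target_words: int = 1500) -> float:
    """Return a 0-10 composite score from normalized 0-1 signals."""
    words = len(a.body.split())
    signals = {
        "depth": min(words / target_words, 1.0),
        "formatting": min(a.heading_count / 4, 1.0),      # assumed: 4+ headings is enough
        "internal_links": min(a.internal_links / 3, 1.0),  # assumed: 3+ links is enough
        "featured_image": 1.0 if a.has_featured_image else 0.0,
        "artifact_free": max(1.0 - 0.25 * count_artifacts(a.body), 0.0),
    }
    return round(10 * sum(WEIGHTS[k] * v for k, v in signals.items()), 1)
```

Because every signal is normalized before weighting, the same function can be applied to all 463 articles and the scores stay comparable.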

The KEEP / REWRITE / KILL Framework

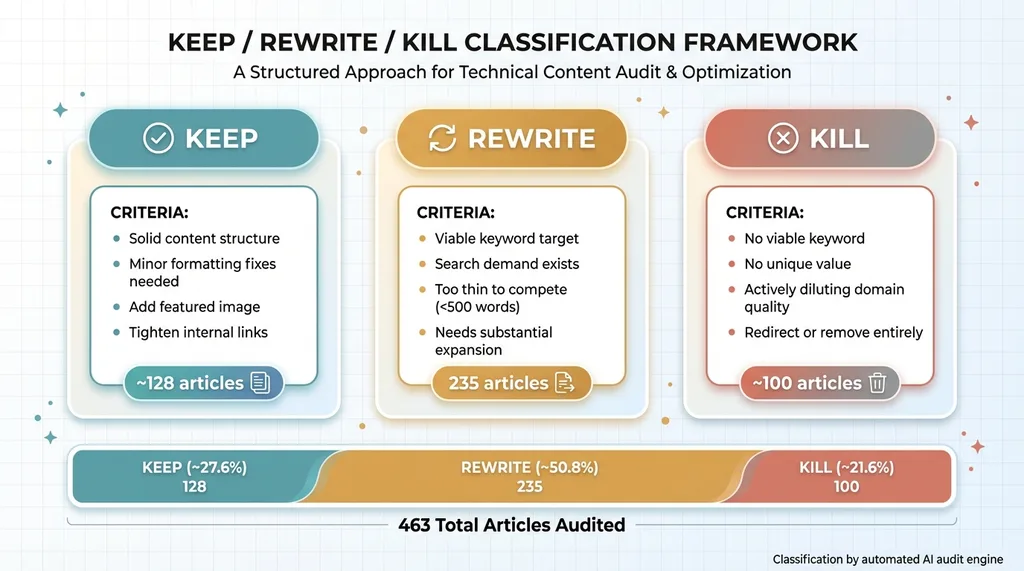

Every article was classified into one of three tiers:

KEEP / REWRITE / KILL Classification Framework

- KEEP: Content that was solid enough to rank with minor tweaks — formatting fixes, adding a featured image, tightening internal links.

- REWRITE: The topic was viable and had search demand, but the article itself was too thin to compete. These needed substantial expansion.

- KILL: Thin filler that would never rank for anything meaningful. No viable keyword target, no unique value, actively diluting site quality.

The numbers: 235 articles flagged for full rewrite. A subset flagged for removal. The rest kept with targeted updates.
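
The three tiers can be expressed as a small decision rule. The sketch below is a simplified assumption of how such a rule might look: the `keep_threshold` value and the use of monthly search volume as the "viable topic" test are mine, not the project's actual cutoffs.

```python
from enum import Enum


class Tier(Enum):
    KEEP = "keep"
    REWRITE = "rewrite"
    KILL = "kill"


def classify(quality_score: float, monthly_search_volume: int,
             keep_threshold: float = 7.0) -> Tier:
    """Assign a tier from a 0-10 quality score and keyword demand.

    keep_threshold is a hypothetical cutoff chosen for illustration.
    """
    if quality_score >= keep_threshold:
        return Tier.KEEP      # solid enough; only minor tweaks needed
    if monthly_search_volume > 0:
        return Tier.REWRITE   # topic has demand, article is too thin
    return Tier.KILL          # weak article with no viable keyword target
```

For example, `classify(3.1, 900)` returns `Tier.REWRITE`, while the same score with zero search volume returns `Tier.KILL`.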

This audit took minutes. A human content strategist reading and evaluating 463 articles would need weeks. And they'd still be less consistent than an automated system applying the same criteria to every single piece.

This step is where most manual blog cleanups fail. People either keep everything because they're afraid to delete content they paid for, or they nuke too aggressively and lose articles that had real potential with some expansion. Accurate classification is the foundation. Without it, you waste resources rewriting articles that should be deleted, or you delete articles that were one good rewrite away from ranking.

Stage 2: Expanding 235 Weak Articles to 1,700 Words Average

The 235 articles flagged for rewrite averaged around 400 words. Thin. Generic. The kind of content that exists in Google's index but never shows up in anyone's search results.

How the AI Expansion Pipeline Worked

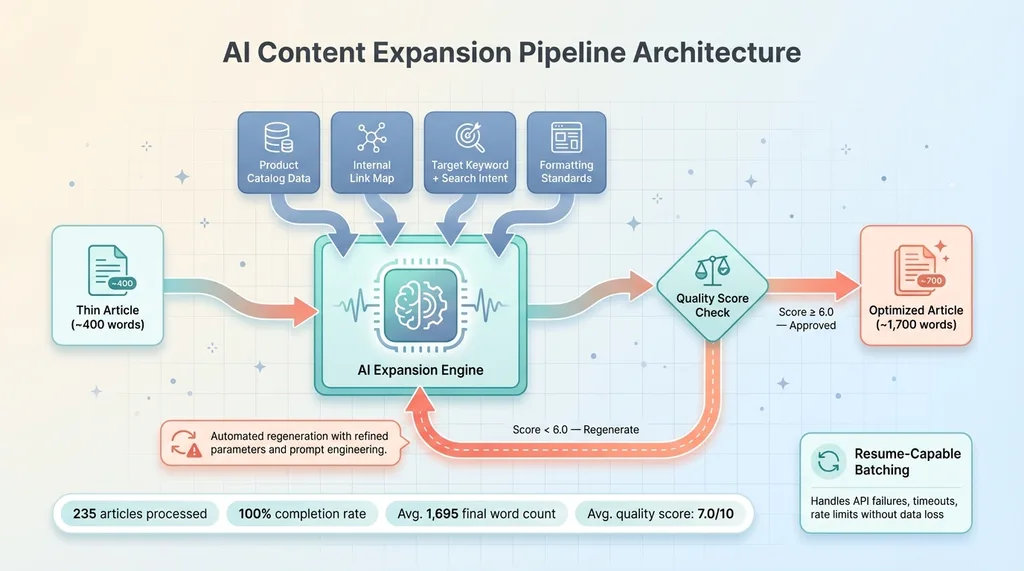

Each article was rebuilt, not padded. The expansion pipeline had context that a generic AI rewrite would never have: the client's full product catalog, the site's internal linking map, the target keyword for each article, and a formatting standard that every piece had to conform to.

AI Content Expansion Pipeline Architecture

The AI pulled real product references from the catalog and wove them into each article. Not generic placeholders like "check out our products" — actual product mentions that made the content commercially relevant and created natural internal links. Heading structures were standardized. Content depth was built around the target keyword's actual search intent, not just word count targets.

Results across the 235 expanded articles: 100% completion rate, average final word count of 1,695 words, average quality score of 7.0 out of 10. I've written separately about how the AI agents for blog automation handled this pipeline architecture.

Why "Just Rewrite It" Doesn't Work at Scale

You can't tell ChatGPT to "make this article longer" and expect anything useful. That produces bloat. Filler sentences. Redundant paragraphs that say the same thing three different ways. Google's systems are specifically tuned to detect that kind of padding, and readers bounce from it instantly.

The pipeline worked because every expansion was a guided rewrite with constraints. The AI knew what products to reference, which other articles to link to, what keyword to optimize for, and what formatting standards to hit. It wasn't generating content in a vacuum — it was generating content within a system that understood the business.
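
A guided rewrite with constraints mostly comes down to how the request is assembled before it reaches the model. The following is a rough sketch under my own assumptions: `ExpansionContext`, `build_expansion_prompt`, the default word floor, and the example formatting rules are hypothetical names and values, not the project's real prompt.

```python
from dataclasses import dataclass, field


@dataclass
class ExpansionContext:
    target_keyword: str
    products: list[str]        # real product names pulled from the catalog
    internal_links: list[str]  # URLs of related articles on the same site
    min_words: int = 1500      # assumed floor, not the project's setting
    formatting_rules: list[str] = field(default_factory=lambda: [
        "Use one H1 and descriptive H2/H3 subheadings",
        "Keep paragraphs under five sentences",
    ])


def build_expansion_prompt(original: str, ctx: ExpansionContext) -> str:
    """Assemble a constrained rewrite prompt from the article and its context."""
    def bullets(items: list[str]) -> str:
        return "\n".join(f"- {item}" for item in items)

    return "\n\n".join([
        f"Rebuild the article below around the search intent for '{ctx.target_keyword}'.",
        f"Write at least {ctx.min_words} words. Do not pad or repeat points.",
        "Mention only these products, and only where relevant:\n" + bullets(ctx.products),
        "Link to these related articles where natural:\n" + bullets(ctx.internal_links),
        "Formatting rules:\n" + bullets(ctx.formatting_rules),
        "ORIGINAL ARTICLE:\n" + original,
    ])
```

The point of the structure is that nothing is left for the model to invent: products, links, keyword, and format all arrive as explicit inputs.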

One technical detail that matters more than it sounds: the pipeline was resume-capable. When you're making hundreds of API calls to process 235 articles, some calls will fail. Network timeouts, rate limits, model errors. A pipeline that can't pause and resume without losing progress will leave you with a half-finished mess and no way to pick up where you left off. Resume-capable batching isn't glamorous, but it's the difference between a reliable system and a fragile script.
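
Resume-capable batching is simple to sketch. The version below assumes a JSON checkpoint file and a caller-supplied `process` function that wraps the API call; the function name, file format, and retry settings are illustrative choices, not the pipeline's actual code.

```python
import json
import time
from pathlib import Path
from typing import Callable, Iterable


def run_resumable(article_ids: Iterable[str],
                  process: Callable[[str], str],
                  checkpoint: Path,
                  max_attempts: int = 3,
                  base_delay: float = 1.0) -> dict[str, str]:
    """Process each article once, saving progress after every success.

    Articles already in the checkpoint are skipped, so a rerun after a
    crash picks up where the previous run stopped.
    """
    done: dict[str, str] = (
        json.loads(checkpoint.read_text()) if checkpoint.exists() else {}
    )
    for article_id in article_ids:
        if article_id in done:
            continue  # finished in an earlier run
        for attempt in range(1, max_attempts + 1):
            try:
                done[article_id] = process(article_id)
            except Exception:
                # Timeout, rate limit, model error: back off and retry.
                if attempt < max_attempts:
                    time.sleep(base_delay * 2 ** (attempt - 1))
                continue
            checkpoint.write_text(json.dumps(done))
            break
        # If every attempt failed, the article stays out of the
        # checkpoint and is retried on the next run.
    return done
```

Running the same command twice is then safe: the second run only touches articles that failed or were never reached.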

Stage 3: Generating 232 Featured Images With a Quality Loop

232 articles had no featured images. That's a problem for SEO — Google increasingly prioritizes visual content in search results — and it's a problem for user experience. A blog post without a featured image looks unfinished. It reduces click-through rates from social shares and internal links. For a fashion ecommerce brand, where the product is inherently visual, it's especially costly.

The Image Generation Pipeline

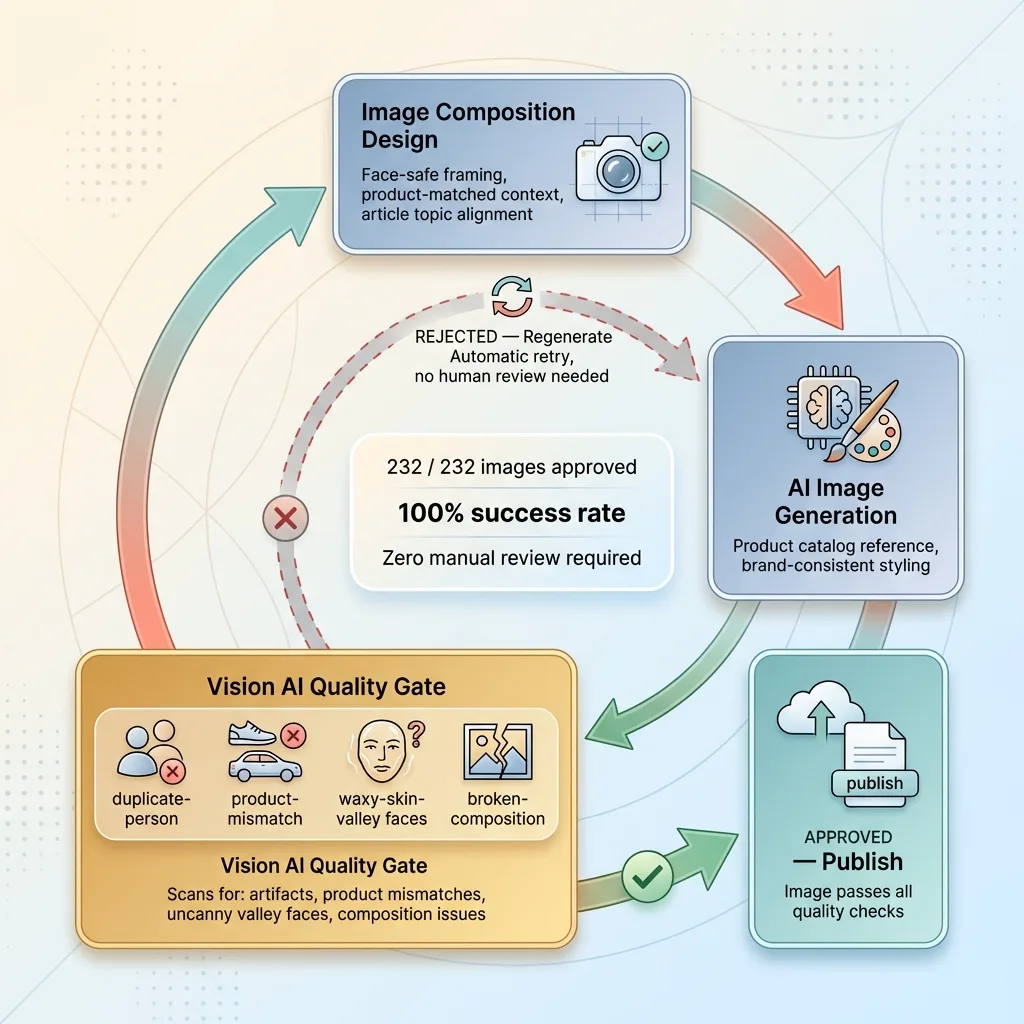

Each image was composed to reference actual products from the client's catalog, matched to the article's topic. This wasn't generic stock photography or abstract "blog header" imagery. If an article was about rave outfit styling for a specific event type, the featured image showed products the brand actually sells, in a context that matched the article's content.

The AI image optimization pipeline handled the performance side — compression, format selection, responsive sizing — as a complementary step after generation. But generation itself was the harder problem.

The Vision QA Gate

AI image generation at scale produces artifacts. It's unavoidable with current models. Duplicate people appearing in the same composition. The same outfit rendered on different people across articles, creating a visual inconsistency that sharp-eyed customers notice. Faces that trigger uncanny valley. Old-style generator artifacts that looked passable six months ago but now look obviously synthetic.

Vision QA Quality Loop for Image Generation

The solution was a multi-stage quality loop. First, compositions were designed to be face-safe — framing and angles that avoided close-up faces where AI still struggles most. Second, each generated image was matched against the product catalog to ensure the depicted products were accurate. Third — the critical piece — a Vision AI gate audited every single image for artifacts before approval.

That vision gate is part of a broader philosophy I've written about: building AI that rejects its own bad work. When the vision model detected problems — artifact patterns, product mismatches, composition issues — the image was automatically regenerated. No human had to review every image manually.
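
The generate, inspect, regenerate cycle can be sketched in a few lines. Here `generate` and `inspect` stand in for the image model and the vision model; both are hypothetical callables, and feeding the detected issues back into the next prompt is my assumption about one reasonable way to steer the retry.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class QAResult:
    passed: bool
    issues: list[str]


def generate_with_qa(prompt: str,
                     generate: Callable[[str], bytes],
                     inspect: Callable[[bytes], QAResult],
                     max_attempts: int = 5) -> tuple[bytes, int]:
    """Regenerate until the vision check passes; return image and attempt count."""
    feedback: list[str] = []
    for attempt in range(1, max_attempts + 1):
        # After a failed attempt, tell the generator what to avoid.
        full_prompt = prompt if not feedback else f"{prompt}\nAvoid: {'; '.join(feedback)}"
        image = generate(full_prompt)
        result = inspect(image)
        if result.passed:
            return image, attempt
        feedback = result.issues
    raise RuntimeError(f"No image passed QA after {max_attempts} attempts")
```

The attempt cap matters: without it, an image the generator cannot get right would loop forever instead of surfacing for a human to look at.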

232 out of 232 images generated and approved. 100% success rate. That wasn't luck. It was the quality loop catching failures and regenerating them automatically until they passed.

Stage 4: The Full-Site Image Audit Nobody Thinks to Do

After generating the new images, the pipeline did something most people skip entirely: it audited every single image across the blog. All 457 of them. Not just the new ones.

Vision AI scanned every image for specific problems. Duplicate people appearing across multiple articles. The same product shown on different models inconsistently. Old generator-style shots with the obviously synthetic look of 2023-era models: waxy skin texture and impossibly smooth backgrounds that current models have moved past. Broken image links. Images that simply didn't match their article's content.
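
Two of these checks are purely mechanical and need no vision model at all. The sketch below covers only those: missing image files and byte-identical images reused across articles. The function name and the article-to-path mapping are assumptions for illustration; the visual checks (duplicate people, dated generator style, content mismatch) would still need a vision model on top.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def audit_images(article_images: dict[str, Path]) -> dict[str, list]:
    """Flag articles with missing images and groups sharing an identical file."""
    broken: list[str] = []
    by_hash: dict[str, list[str]] = defaultdict(list)
    for slug, path in article_images.items():
        if not path.is_file():
            broken.append(slug)  # image reference points at nothing
            continue
        digest = hashlib.sha256(path.read_bytes()).hexdigest()
        by_hash[digest].append(slug)
    # Any hash seen on more than one article is an exact duplicate.
    duplicates = [sorted(slugs) for slugs in by_hash.values() if len(slugs) > 1]
    return {"broken": broken, "duplicates": duplicates}
```

Running the cheap checks first keeps the vision model's budget for the problems only it can see.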

This caught issues that had accumulated over months. Earlier images, created with less capable models, were noticeably lower quality than current-generation output. They'd been sitting there, unreviewed, degrading the visual consistency of the entire blog.

Cleaning these up created uniformity across every article. That matters for user experience — a reader clicking through multiple articles sees a coherent brand, not a patchwork of different quality levels. And it matters for the quality signals Google picks up when crawling the site. Consistency signals intentionality. A blog that looks maintained ranks better than one that looks abandoned between posts.

Most blog cleanup projects generate new images and call it done. That leaves legacy problems festering across every article that wasn't in the rewrite batch.

Why Bad Content Hurts Your Entire Domain

Everything above was execution. Here's why it matters strategically.

Google's Helpful Content System Is Site-Wide

Google launched its helpful content system in 2022 and folded it into its core ranking systems in March 2024. Google described the original system as producing a site-wide signal, not a page-by-page one. If Google's systems determine that a significant portion of your content isn't helpful, that assessment can affect ranking for every page on the site, including the good ones.

How Content Debt Drags Down Your Entire Domain

This is the part that makes CEOs pay attention: your best articles are being held back by your worst ones.

The Math on Content Debt

If 463 articles are split roughly 50/50 between decent content and thin filler, Google's systems see a site where half the content isn't helpful. That's a site-wide quality signal. Your 200 good articles don't get evaluated in isolation — they get evaluated in the context of a domain that publishes junk half the time.

This is why the KILL tier matters as much as the REWRITE tier. Some articles shouldn't be saved. They should be redirected or removed entirely. Keeping a 400-word article that targets a keyword with zero search volume isn't preserving content — it's preserving a liability.

After the overhaul, every article on the blog had depth, proper formatting, relevant featured images, and internal links. The domain went from a mixed-quality content profile to a coherent content asset. The floor was raised, which means the ceiling can rise.

If you're running a brand with a blog that's accumulated years of content from different writers, agencies, and AI experiments, the question isn't whether you have content debt. It's how much.

What a Project Like This Actually Takes

I want to be honest about what went into this, because I think transparency builds more trust than hype.

End-to-End 48-Hour Project Timeline

This wasn't done with off-the-shelf tools. No SaaS product I've seen can do blog content audit automation with product-aware context, run a vision QA loop on generated images, automatically submit updated content to Google Search Console, and handle resume-capable batching across hundreds of API calls. This was custom pipeline code — Python, multi-model AI architecture, and integrations built specifically for this problem.

The 48-hour timeline was execution time for the automated pipeline. The pipeline itself took longer to build. I'm not going to pretend I spun this up in an afternoon. But once built, it's reusable. The next client with a similar problem gets the same result faster, because the architecture already exists.

What off-the-shelf tools can do: basic content audits, simple AI rewrites, stock image generation. What they can't do: AI content optimization at scale with product catalog integration, quality loops that catch and fix their own failures, and full-site visual consistency audits. That gap is where the real value lives.

If you're sitting on a blog with hundreds of articles and you're not sure which ones are helping versus hurting, that's exactly the kind of problem I build solutions for. I'm happy to walk through what this would look like for your site — no pitch deck, just an honest look at where you stand.

Thinking About What Your Blog Is Actually Doing for You?

If anything in this resonated — the content debt, the thin articles you know are sitting there, the nagging sense that your blog should be performing better than it is — let's talk. I do free 30-minute discovery calls where we look at your specific situation and identify whether a cleanup like this would actually move the needle for your domain.

No slides. No generic recommendations. Just a conversation about what's real.
