72 Commits, 10 Projects, One Week: AI Development Velocity

One operator, 72 commits across 10 projects in 5 days. A real look at AI development velocity — what shipped, what compounded, and what AI still can't do.

By Mike Hodgen

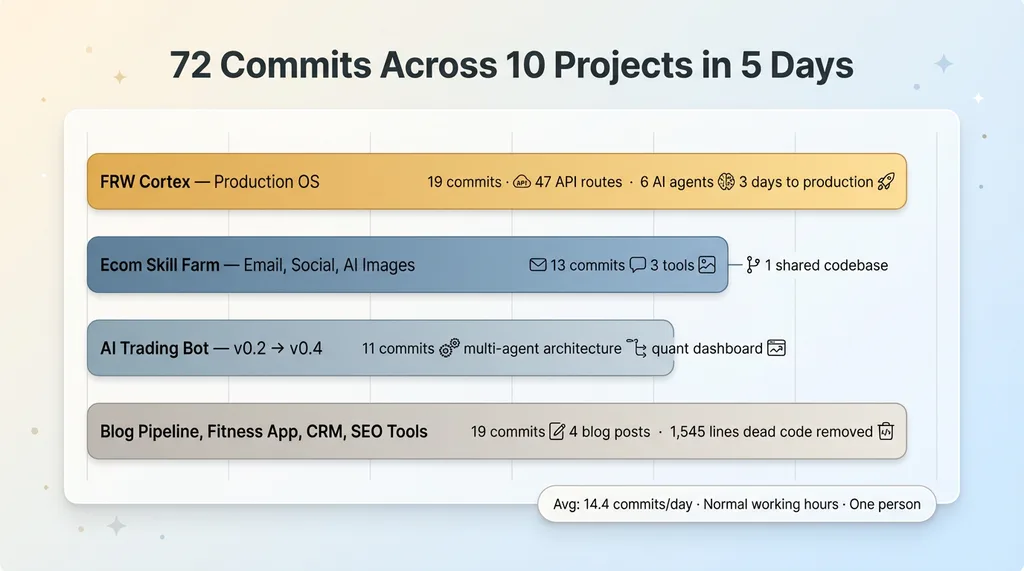

72 commits. 10 distinct projects. 5 working days. One person.

I'm sharing those numbers not as a brag but as a data point. This is what AI development velocity actually looks like when you've invested in the right stack, the right patterns, and the right judgment about what to build. And before you picture some Red Bull-fueled marathon: I worked normal hours. I picked up my kids from school. I had dinner with my wife.

This isn't about grinding. It's about what happens when AI removes the friction between deciding to build something and having it running in production.

That averages out to roughly 14 commits per day across completely different domains — crypto trading, warehouse management, email marketing, fitness apps, CRM systems, SEO tooling, and content publishing. Not prototypes. Not demos. Shipped, running, production software.

I'm writing this for the skeptical CEO who's been told AI-assisted software development is transformative but has only seen slide decks and proof-of-concepts that never went anywhere. Everything in this article actually shipped. I've got the commit logs, the production URLs, and the business results to back it up.

Here's what actually happened, the patterns that made it possible, and the honest limits I ran into.

What Actually Shipped in 5 Days

Weekly Output Breakdown by Project


AI Trading Bot: v0.2 to v0.4 (11 commits)

I started the week with a basic crypto trading bot sitting at v0.2 — functional structure, some backtesting, but nothing close to what I'd trust with real capital. By Friday it was at v0.4 with multi-agent architecture: Grok handling real-time sentiment analysis, prediction market integration for signal confirmation, statistical arbitrage logic, and a full quant dashboard for monitoring everything.
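The article does not describe the bot's internals, so the following is only a minimal sketch of one common form of statistical arbitrage logic: a z-score on the spread between two price series. The function names, the `hedge_ratio` parameter, and the entry threshold of 2.0 are all assumptions made for illustration, not details from the actual bot.

```python
from statistics import mean, stdev


def spread_zscore(prices_a, prices_b, hedge_ratio=1.0):
    """Z-score of the latest spread relative to the earlier spread history.

    hedge_ratio is a hypothetical parameter; a real system would estimate it.
    """
    if len(prices_a) != len(prices_b) or len(prices_a) < 3:
        raise ValueError("need two equal-length series with at least 3 points")
    spreads = [a - hedge_ratio * b for a, b in zip(prices_a, prices_b)]
    history, latest = spreads[:-1], spreads[-1]
    sigma = stdev(history)
    if sigma == 0:
        # A flat history carries no information about what counts as unusual.
        return 0.0
    return (latest - mean(history)) / sigma


def spread_signal(z, entry=2.0):
    """Map a z-score to a mean-reversion action (threshold is an assumption)."""
    if z > entry:
        return "short_spread"  # spread unusually wide: bet on it narrowing
    if z < -entry:
        return "long_spread"   # spread unusually narrow: bet on it widening
    return "hold"
```

In a multi-agent setup like the one described, a signal of this kind would be one input among several, with sentiment and prediction-market data used to confirm or veto it before any order is placed.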

I wrote about building the initial AI trading bot a few weeks earlier. That first version took a solid weekend. Getting from v0.2 to v0.4 — a much larger jump in complexity — took 11 commits spread across the week, mostly in focused 1-2 hour sessions.

The point here isn't the trading bot itself. It's that iterating on complex multi-agent systems at this pace would normally require a small engineering team. I'm one person with Claude Code and a clear architectural vision.

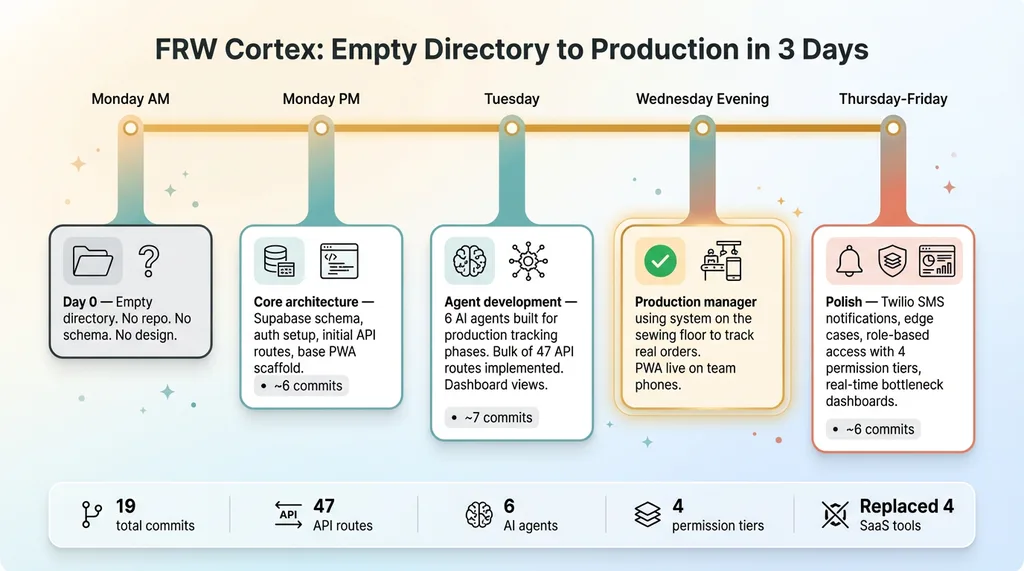

Cortex: Zero to Production OS in 3 Days (19 commits)

This was the showpiece of the week. Cortex went from literally nothing — no repo, no schema, no design — to a full 5-phase warehouse management system running in production.

Cortex: Zero to Production Timeline


What shipped: 6 AI agents handling different aspects of production tracking. 47 API routes. A Progressive Web App so my warehouse team can use it on their phones. Twilio SMS notifications for phase transitions and alerts. Role-based access control with four permission tiers. Real-time dashboards showing production flow, bottlenecks, and daily output.
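To show how phase transitions, permission tiers, and notifications can fit together, here is a minimal sketch, not the actual Cortex code. The phase names, the role names, and the `advance_phase` function are hypothetical; the article only states that there are five phases and four permission tiers. The `notify` argument stands in for whatever sends the SMS.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Callable


class Role(IntEnum):
    # Hypothetical tier names; the article only says there are four tiers.
    VIEWER = 1
    OPERATOR = 2
    MANAGER = 3
    ADMIN = 4


# Hypothetical phase names; the article only says the system has five phases.
PHASES = ["cutting", "sewing", "finishing", "quality_check", "shipping"]


@dataclass
class Order:
    order_id: str
    phase_index: int = 0

    @property
    def phase(self) -> str:
        return PHASES[self.phase_index]


def advance_phase(
    order: Order,
    actor_role: Role,
    notify: Callable[[str], None],
    min_role: Role = Role.OPERATOR,
) -> str:
    """Move an order to the next phase, enforcing a minimum role, then notify."""
    if actor_role < min_role:
        raise PermissionError(f"{actor_role.name} cannot advance orders")
    if order.phase_index >= len(PHASES) - 1:
        raise ValueError("order is already in the final phase")
    previous = order.phase
    order.phase_index += 1
    # notify is any callable taking a message; in production it could wrap
    # an SMS provider, in tests it can simply append to a list.
    notify(f"Order {order.order_id}: {previous} -> {order.phase}")
    return order.phase
```

Passing the notifier in as a plain callable keeps the transition logic testable without sending real messages: `advance_phase(Order("A-100"), Role.OPERATOR, print)` prints the transition instead of texting anyone.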

19 commits over roughly 3 days.

This replaced manual tracking on whiteboards and spreadsheets and eliminated several SaaS subscriptions we had been stitching together. You can read the full story of the Production OS that replaced four SaaS tools; it covers the architecture decisions and the business impact in detail.

I want to be specific about the timeline because it matters. Monday morning: empty directory. Wednesday evening: my production manager was using it to track real orders through our sewing floor. Thursday and Friday: polish, edge cases, notifications. That's not a hackathon prototype. That's a production system serving a real team.

Ecom Skill Farm: Email, Social, and AI Image Generation (13 commits)

This cluster was three distinct tools that share a codebase:

Email Campaign Engine — Not another Mailchimp clone. A system that builds campaign sequences from product data, customer segments, and seasonal context. It generates draft campaigns that I review and refine, then schedules them through our existing ESP. We've been meaning to build this for over a year. It always got deprioritized because it wasn't on fire.

Social Media Scout — Competitive intelligence tooling that monitors competitor social accounts, tracks engagement patterns, and surfaces content strategies worth testing. The kind of thing a social media agency would charge $2K/month for.

AI Lifestyle Image Generation — Product photography is expensive and slow. This system takes our product shots and generates lifestyle imagery using Gemini, with style controls tuned to the brand aesthetic.

13 commits. This is the category of internal tooling that never gets built because it's important but not urgent. AI makes it buildable in the margins between larger projects. An hour here, ninety minutes there. It adds up.

Everything Else: Blogs, Fitness App, CRM, SEO Tools (19 commits)

Another 19 commits across the week went to the "small stuff" that normally piles up as tech debt and maintenance backlog:

4 blog articles published through the automated blog pipeline to hodgen.ai. Research, drafting, SEO optimization, internal linking, publishing — the whole workflow that used to take 3-4 hours per article now takes about 40 minutes of my focused attention.

Brad Hills fitness app — A client project. Not glamorous, but 1,545 lines of dead code removed and significant tech debt cleaned up in 4 commits. The kind of cleanup that developers avoid because it's tedious and thankless. Claude Code rips through dead code identification and safe removal like nothing I've seen.

DTC brand toolkit updates — Improvements to our Google Search Console dashboard, pricing automation refinements across our 564-product catalog, and blog rewriter enhancements.

Flowline CRM — Production bug fixes and a marketing landing page for the product.

I'm listing the small stuff deliberately. AI building at scale isn't just about the big flashy projects. It makes maintenance, polish, and cleanup achievable too. The unglamorous work that keeps systems healthy? It actually gets done now because the activation energy dropped from "block out an afternoon" to "handle it in 20 minutes."

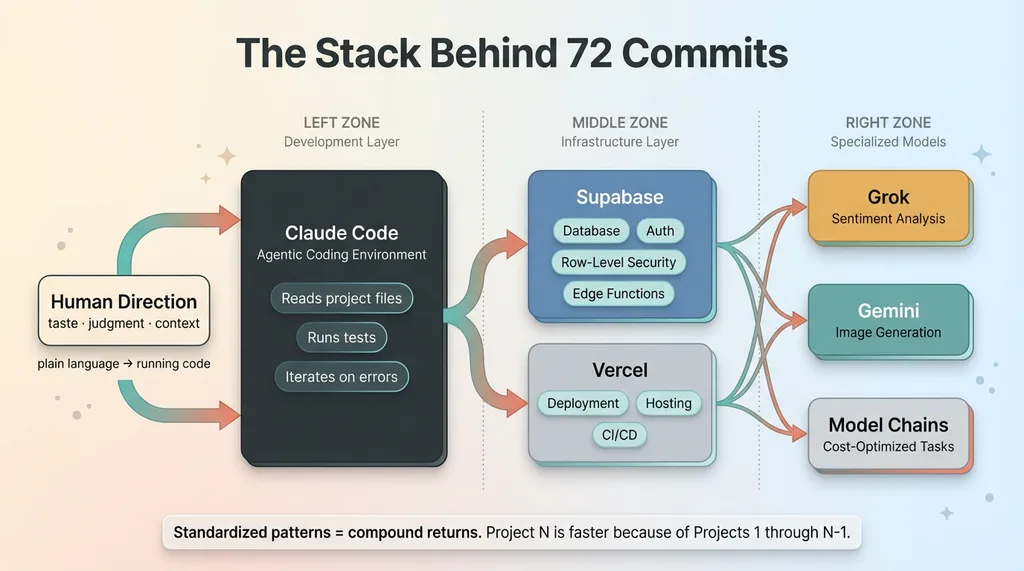

The Stack That Makes This Possible

Every project that week used the same core pattern: Claude for reasoning and code generation, Supabase for data and auth, Vercel for deployment, and frontier models for specialized tasks — Grok for sentiment analysis, Gemini for image generation, and various models chained together for cost efficiency.

The AI Development Stack Architecture


I've written about this multi-model architecture in detail. The short version: standardizing on a stack that AI understands deeply is a force multiplier. When Claude has seen your Supabase schema patterns a hundred times, it doesn't need hand-holding on the hundred-and-first. It knows your naming conventions, your row-level security patterns, your edge function structure.
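The per-task model split can be pictured as a small routing table. This is a minimal sketch under stated assumptions: the task labels, the `run_task` helper, and the fallback to Claude for unknown tasks are hypothetical, and the clients are stub callables rather than real SDK calls. Only the task-to-provider pairing comes from the article.

```python
from typing import Callable, Dict

# Provider pairing as described in the article; the task keys are
# hypothetical labels chosen for this example.
ROUTES: Dict[str, str] = {
    "reasoning": "claude",
    "code_generation": "claude",
    "sentiment_analysis": "grok",
    "image_generation": "gemini",
}


def pick_provider(task_type: str, default: str = "claude") -> str:
    """Return the provider for a task type, falling back to a default.

    The fallback choice is an assumption, not something the article states.
    """
    return ROUTES.get(task_type, default)


def run_task(
    task_type: str,
    payload: str,
    clients: Dict[str, Callable[[str], str]],
) -> str:
    """Dispatch a payload to whichever client handles this task type."""
    provider = pick_provider(task_type)
    if provider not in clients:
        raise KeyError(f"no client registered for provider '{provider}'")
    return clients[provider](payload)
```

Keeping the routing in one table means swapping a model for a cheaper one is a one-line change rather than a hunt through every project.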

The tooling distinction matters enormously. I'm not copy-pasting code from ChatGPT into VS Code. Claude Code is the primary development environment — actual agentic coding where the AI reads your files, understands your project structure, runs tests, encounters errors, and iterates on fixes. This is the difference between "using AI" and building with AI as a true pair partner.

In practice it feels like this: I describe what I want in plain language, Claude writes the implementation, I review the output and adjust direction. The cycle time between idea and running code is minutes, not hours. Some of those 47 API routes in Cortex went from "I need an endpoint that does X" to tested and deployed in under 10 minutes.

That's not magic. It's the result of deliberate infrastructure choices made months ago paying compound interest.

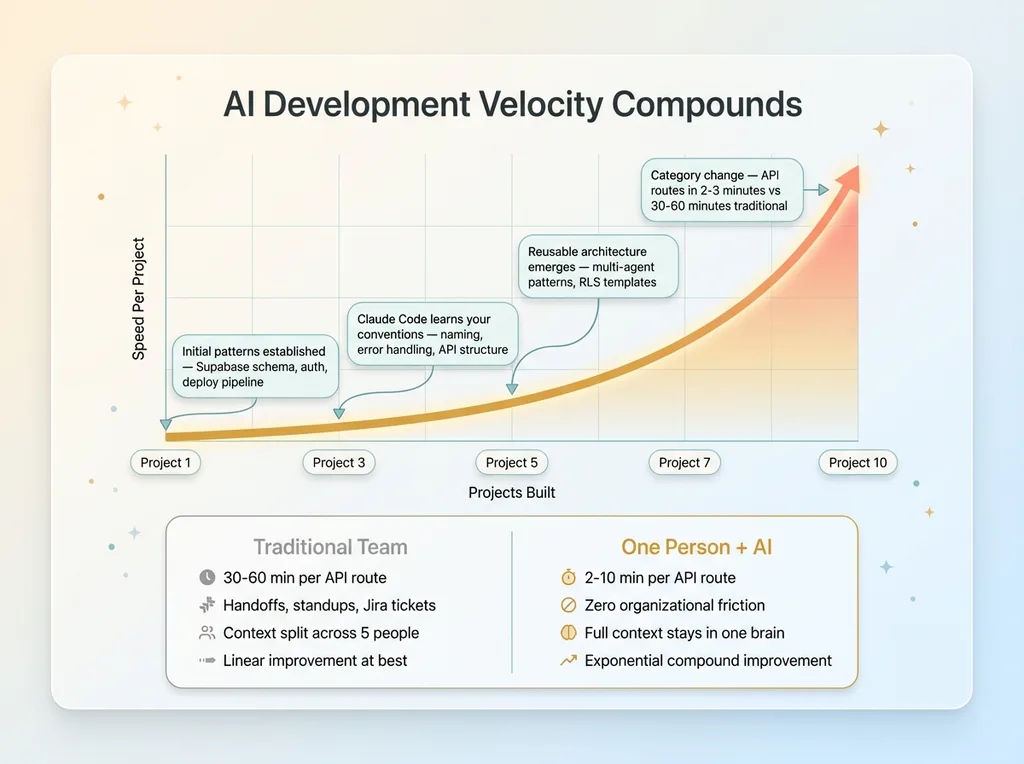

The Compound Effect: Why Project 10 Is Faster Than Project 1

This is the insight most people miss about AI development velocity. It's not linear. It compounds.

The Compound Velocity Effect


Every project creates reusable patterns. The Supabase row-level security setup I built for Cortex? Parts of that pattern informed the CRM auth system later that same week. The multi-agent architecture from the trading bot? That thinking directly shaped how I structured the 6 AI agents in the Production OS. The deployment pipeline I refined on the fitness app? It made every subsequent deploy smoother.

Claude Code's project memory is a real factor here. It remembers your conventions, your error handling patterns, your deployment workflow, your preference for how you structure API responses. Building project N is measurably faster because projects 1 through N-1 created scaffolding — both in actual code and in the AI's understanding of how you work.

This is why the one-person AI team framing matters. A solo operator with deep context across all projects can move faster than a 5-person team dealing with handoffs, standups, context switching, and knowledge silos. Not because I'm smarter, but because the context never leaves the room. There's no ticket to write, no Jira board to update, no pull request to wait on. Idea to implementation to deployment with zero organizational friction.

Here's a concrete example. Each of those 47 API routes in Cortex handles data validation, authentication, error handling, and response formatting. A traditional developer might spend 30-60 minutes on each one. With established patterns and Claude Code understanding my conventions, we were generating and testing routes in 5-10 minute cycles, and some simpler CRUD routes were done in 2-3 minutes. If those rates held across all 47 routes, that is roughly 24 to 47 hours of work compressed into 4 to 8. That's not a percentage improvement. That's a category change.
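For readers who have not written one, the four responsibilities of a route look roughly like this. It is a minimal, framework-free sketch and not the actual Cortex code: the token table, the field names, the `ApiError` class, and the response envelope are all hypothetical, and a real route would delegate authentication to the auth provider rather than a dictionary.

```python
from typing import Any, Dict, Optional, Tuple


class ApiError(Exception):
    """An error carrying an HTTP status code and a client-safe message."""

    def __init__(self, status: int, message: str):
        super().__init__(message)
        self.status = status
        self.message = message


# Hypothetical token table standing in for a real auth provider.
VALID_TOKENS = {"demo-token": "manager"}


def authenticate(headers: Dict[str, str]) -> str:
    auth = headers.get("Authorization", "")
    if not auth.startswith("Bearer "):
        raise ApiError(401, "missing bearer token")
    user = VALID_TOKENS.get(auth[len("Bearer "):])
    if user is None:
        raise ApiError(401, "invalid token")
    return user


def validate(body: Dict[str, Any]) -> Dict[str, Any]:
    order_id, quantity = body.get("order_id"), body.get("quantity")
    if not isinstance(order_id, str) or not order_id:
        raise ApiError(400, "order_id must be a non-empty string")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(quantity, bool) or not isinstance(quantity, int) or quantity <= 0:
        raise ApiError(400, "quantity must be a positive integer")
    return {"order_id": order_id, "quantity": quantity}


def respond(status: int, data: Any = None, error: Optional[str] = None) -> Tuple[int, Dict[str, Any]]:
    """Every route returns the same envelope shape."""
    return status, {"ok": error is None, "data": data, "error": error}


def create_order_route(headers: Dict[str, str], body: Dict[str, Any]) -> Tuple[int, Dict[str, Any]]:
    try:
        user = authenticate(headers)
        order = validate(body)
        return respond(201, {"created_by": user, **order})
    except ApiError as exc:
        return respond(exc.status, error=exc.message)
    except Exception:
        # Never leak internals to the client on unexpected failures.
        return respond(500, error="internal error")
```

Once helpers like `authenticate`, `validate`, and `respond` exist, each new route is mostly the three lines inside the `try`, which is where the minutes-per-route figure becomes plausible.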

And the effect keeps compounding. Next week's projects will be faster than this week's because of what I built and learned these five days. The velocity curve bends upward.

What AI Still Can't Do (And Why That's the Whole Point)

Here's where I need to be honest, because this matters more than the commit numbers.

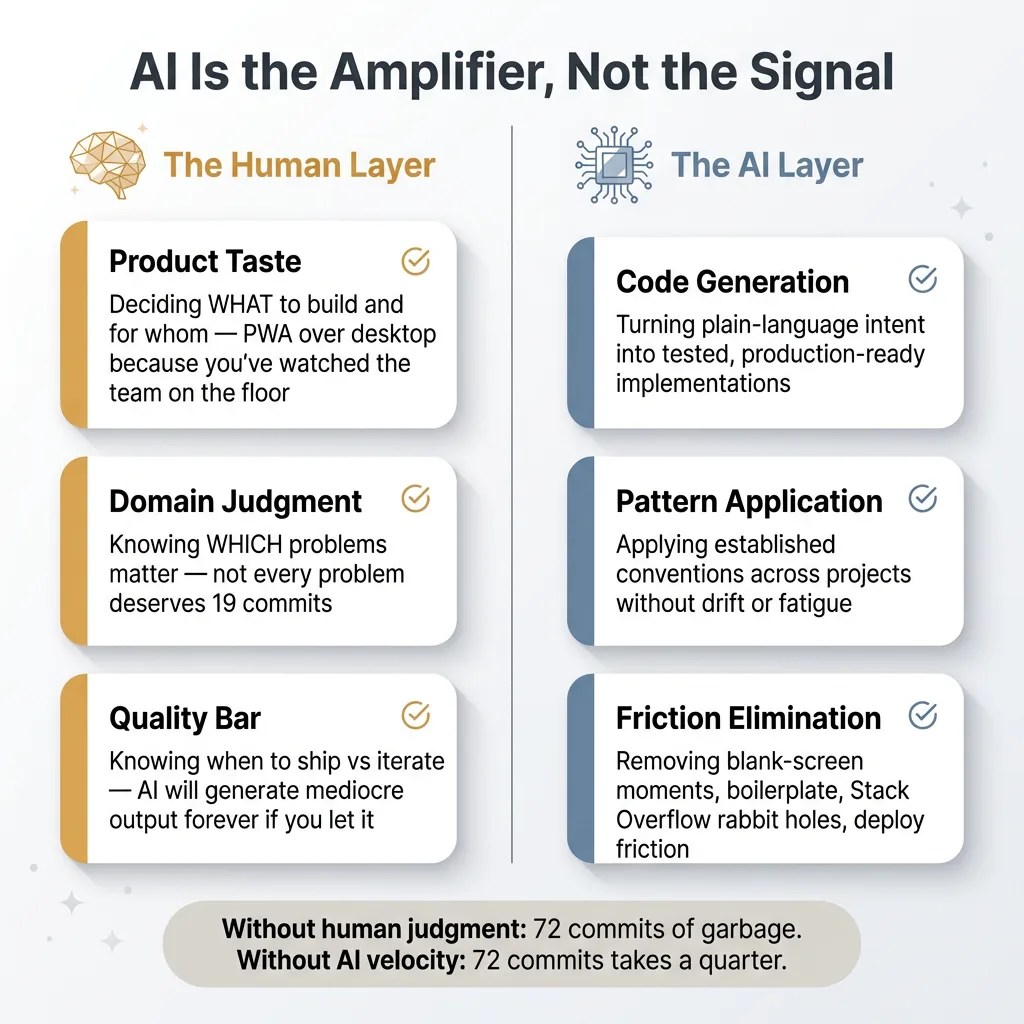

Human vs AI Roles in High-Velocity Development


AI didn't decide to build a Production OS for my brand's warehouse. I did. I made that call because I've watched my team struggle with manual tracking for months. I know exactly which phases of our production process cause bottlenecks. I know which information my production manager needs at 7 AM versus which reports I need at end of week. That context came from running the business, not from a prompt.

AI didn't choose which trading strategies to implement. I did, based on market thesis, risk tolerance, and my read on where crypto markets are heading. AI didn't write the email campaign copy that resonates with our customers. It generated drafts. I shaped them because I know our audience — I've been selling to them for years.

Three things AI can't replace:

- Product taste — Knowing what to build and for whom. The judgment that says "my warehouse team needs a PWA, not a desktop app" because I've seen them working on the floor.

- Domain judgment — Understanding which problems actually matter to your business. Not every problem is worth solving. Knowing which ones are worth 19 commits in a week requires understanding the business deeply.

- Quality bar — Knowing when something is good enough to ship versus when it needs another pass. AI will happily generate mediocre output forever if you let it.

AI is the amplifier, not the signal. A week like this is only possible because I had a clear backlog of high-value work and the judgment to prioritize it. Hand this same toolkit to someone without domain expertise and you get 72 commits of garbage.

This is why "just use AI" isn't a strategy. The human layer — taste, judgment, context — is what makes velocity meaningful instead of just fast.

The Gap Is Widening Every Week

The point of this article isn't that I'm uniquely productive. It's that the gap between companies building with AI and companies still doing everything manually is widening. Not gradually. Exponentially.

A week like this from one operator represents what might take a small traditional dev team a full quarter. Not because AI writes perfect code — it doesn't — but because it eliminates the dead time. The blank-screen moments. The Stack Overflow rabbit holes. The boilerplate. The deployment friction. The context switching between languages and frameworks. All of it compressed or eliminated.

For the CEO reading this: you don't need 72 commits across 10 projects. You need ONE of these projects — the right one for your business — built and running. The internal tool that eliminates 20 hours of manual work per week. The pricing system that adjusts while you sleep. The production tracking that replaces your spreadsheet nightmare.

The question isn't whether AI development velocity is real. You're reading the commit logs. The question is whether you're capturing it or watching your competitors capture it first.

I spent this week building for my own businesses and clients. What would a week focused on your operation produce?

If you want to explore that, let's have a conversation.
